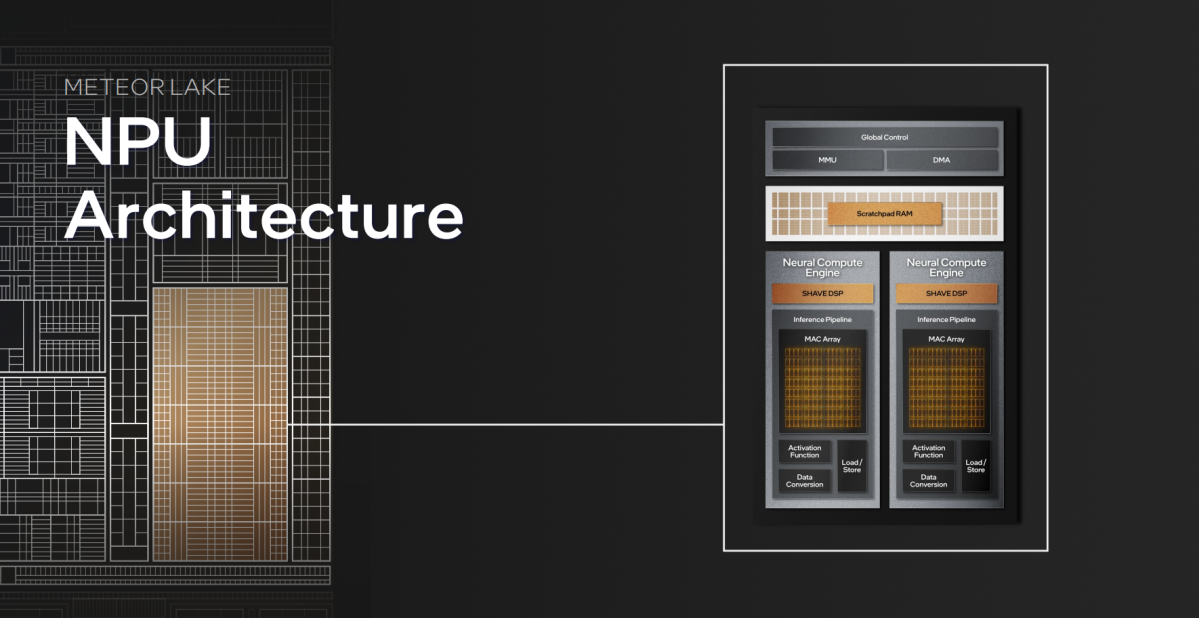

To the surprise of precisely no one who has been following the PC industry for the last six months, “AI PCs” were everywhere at CES 2024, powered by new chips like Intel’s Core Ultra and AMD’s Ryzen 8000 with dedicated “Neural Processing Units” (NPUs). These help accelerate AI tasks locally, rather than reaching out to cloud servers (as ChatGPT and Microsoft Copilot do). But what does that actually mean for you, an everyday computer user?

That’s the question I hoped to answer as I wandered the show floor, visiting PC makers of all shapes and sizes. Most early implementations of local, NPU-processed software have focused heavily on creator workloads, improving performance in tools like Adobe Photoshop, DaVinci Resolve, and Audacity. But how can local AI help Joe Schmoe?

After scouring the show, I can say that NPU improvements aren’t especially compelling yet in these early days, though if you have an Nvidia GPU, you’ve already got powerful, practical AI at your fingertips.

But first, NPU-based AI.

Local AI takes baby steps

The HP Omen Transcend.

IDG / Matthew Smith

Frankly, NPU-driven AI isn’t compelling yet, though it can pull off some cool parlor tricks.

HP’s new Omen Transcend 14 showed off how the NPU can be used to offload video-streaming tasks while the GPU ran Cyberpunk 2077. That's nifty, to be sure, but once again focused on creators. Acer’s Swift laptops thankfully take a more practical angle. They integrate Temporal Noise Reduction and what Acer calls PurifiedView and PurifiedVoice 2.0 for AI-filtered audio and video, with a three-mic array, and more AI capabilities are promised later this year.

MSI’s stab at local AI also tackles cleaning up Zoom and Teams calls. A Core Ultra laptop demo showed Windows Studio Effects tapping the NPU to automatically blur the background of a video call. Next to it, a laptop set up with Nvidia’s excellent AI-powered Broadcast software was doing the same. The Core Ultra laptop used dramatically less power than the Nvidia notebook, since it didn’t need to fire up a discrete GPU to process the background blur, shunting the task to the low-power NPU instead. So that’s cool, and unlike RTX Broadcast, it doesn’t require you to have a GeForce graphics card installed.

Just as practically, MSI’s new AI engine intelligently detects what you’re doing on your laptop and dynamically changes the battery profile, fan curves, and display settings as needed for the task. Play a game and everything gets cranked; start slinging Word docs and everything ramps down. It’s cool, but existing laptops already do this to some degree.

MSI also showed off a nifty AI Artist app, running on the popular Stable Diffusion local generative AI art framework, that lets you create images from text prompts, create suitable text prompts from images you plug in, and create new images from images you select. Windows Copilot and other generative art services can already do this, of course, but AI Artist performs the task locally and is more versatile than simply slapping words into a box to see what images it can create.

Lenovo’s vision for NPU-driven AI seemed the most compelling. Dubbed “AI Now,” this text input-based suite of features seems genuinely useful. Yes, you can use it to generate images, natch, but you can also ask it to automatically set those images as your wallpaper.

More helpfully, typing prompts like “My PC config” instantly brings up hardware information about your PC, removing the need to dive into arcane Windows sub-menus. Asking for “eye care mode” enables the system’s low-light filter. Asking it to optimize battery life adjusts the power profile depending on your usage, similar to MSI’s AI engine.

Those are useful, albeit somewhat niche, but the feature that impressed me most was Lenovo’s Knowledge Base. You can train AI Now to sift through documents and files stored in a local “Knowledge Base” folder, and quickly generate reports, synopses, and summaries based only on the files within, never touching the cloud. If you stash all your work files in it, you could, for instance, ask for a recap of all progress on a given project over the past month, and it will quickly generate that using the information stored in your documents, spreadsheets, et cetera. Now this seems truly useful, mimicking the cloud-based Office Copilot features that Microsoft charges businesses an arm and a leg for.

But. AI Now is currently in the experimental stage, and when it launches later this year, it will come to China first. What’s more, the demo I saw wasn’t actually running on the NPU yet; instead, Lenovo was using traditional CPU grunt for the tasks. Sigh.

And that’s my core takeaway from the show. NPUs are only just starting to appear in computers, and the software that taps into them ranges from gimmicky to “way too early.” It will take time for the so-called “AI PC” to develop in any practical sense.

Unless you already have an Nvidia graphics card installed, that is.

The AI PC is already here with GeForce

Thiago Trevisan/IDG

Visiting Nvidia’s suite after the laptop makers drove home that the AI PC is, in fact, already here if you’re a GeForce owner.

That shouldn’t come as a surprise. Nvidia is at the forefront of AI development and has been driving the segment for years. Features like DLSS, RTX Video Super Resolution, and Nvidia Broadcast are all tangible, practical real-world AI applications that users love and use every day. There’s a reason Nvidia can charge a premium for its graphics cards.

The company was showing off some cool cloud-based AI tools (its ACE character engine for game NPCs can now hold full-blown generative chats about anything, in a variety of languages, and its iconic Jin character did not appreciate it when I told him his ramen sucked), but I want to focus on the local AI tools, since that’s the point of this article.

A lineup of creator-focused Nvidia Studio laptops was on hand showing just how powerful GeForce’s dedicated ray tracing and AI tensor cores can be at accelerating creation tasks, such as real-time image rendering or removing items from photos. But again, while that’s great for creators, it’s of little practical benefit to everyday users.

Two different AI demos are.

One, a complement to the existing RTX Video Super Resolution feature that uses GeForce’s AI tensor cores to upscale and beautify low-resolution videos, focuses on using AI to translate standard dynamic range video into high dynamic range (HDR). Dubbed RTX Video HDR, it looked truly transformative in the demos I witnessed. The overly crushed darks in a Game of Thrones scene caused by video compression were cleared up and brightened using the feature, delivering a stunning increase in image quality. It was a similar story in an underground scene from another still, where the back of a subway was dark beyond comprehension, but RTX Video HDR let you pick out a tunnel, garbage cans, and other hidden details previously lost to the gloom. It looks great and should be arriving in a GeForce driver later this month.

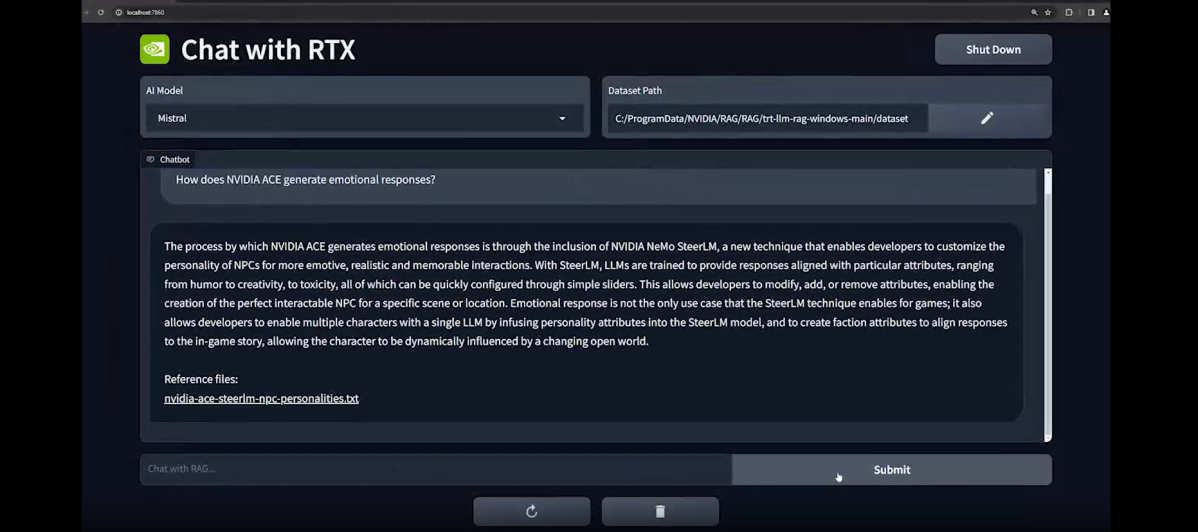

Chat with RTX was able to provide an accurate description of Nvidia’s new ACE features after being pointed to a local file.

Nvidia

Then there was Chat with RTX, which really impressed me. Most AI chatbots bump your requests out to the cloud, where they’re processed on company servers and then sent back to you. Not Chat with RTX, an upcoming application that runs on either the Mistral or Llama LLMs. The key thing here is that it can also be trained on your local text, PDF, doc, and XML files, so you can ask the application questions about your specific information and needs, and it all runs locally. You can ask it questions like, “Where did Sarah say I should go to dinner next time I’m in Vegas?” and have the answer pop right up from your files.

Better yet, since it runs locally, the answers in our demos appeared much faster than the answers generated by cloud-based LLMs like ChatGPT. You can also point Chat with RTX to specific YouTube videos to ask questions about their content, or to receive a general summary. Chat with RTX scans the YouTube-provided transcript for that particular video and your answer appears in seconds. It is rad.

Chat with RTX should also be out in demo form, and Nvidia is releasing its backbone so that developers can create new programs that tap into it.

Compared to the AI demos I saw for NPU applications, the features on display at Nvidia’s booth felt both more practical and much more powerful. Nvidia reps told me that was a goal for the company: to show that AI PCs already exist and can drive genuinely useful experiences, if you have a GeForce GPU, of course.

Bottom line

Intel

And that’s really my overarching takeaway on the AI PC from CES 2024. Will AI amount to more than former buzzwords like “blockchain” and “the metaverse,” which fizzled out in spectacular fashion? I think so. Companies like Nvidia are already using it to great effect. But NPUs are practically still in diapers and learning to talk. There’s no overly compelling practical feature that taps into them yet unless you’re a content creator.

Don’t get me wrong: The future looks potentially bright for local NPUs as a whole (the entire computing industry is willing it into being before our very eyes), but if you want a true AI PC right now, one with tangible, practical benefits for everyday computer users, you’re better off investing in a tried-and-true Nvidia RTX GPU than a chip with a newfangled NPU.
