
Hey you, cyberpunk wunderkind, able to shift all the paradigms and think outside every box you can find. Do you want to run a super-powerful, mind-boggling artificial intelligence right on your own computer? Well, you can, and you've been able to for a while. But now Nvidia is making it super easy, barely an inconvenience, with a preconfigured generative text AI that runs on its consumer-grade graphics cards. It's called "Chat with RTX," and it's available as a beta right now.

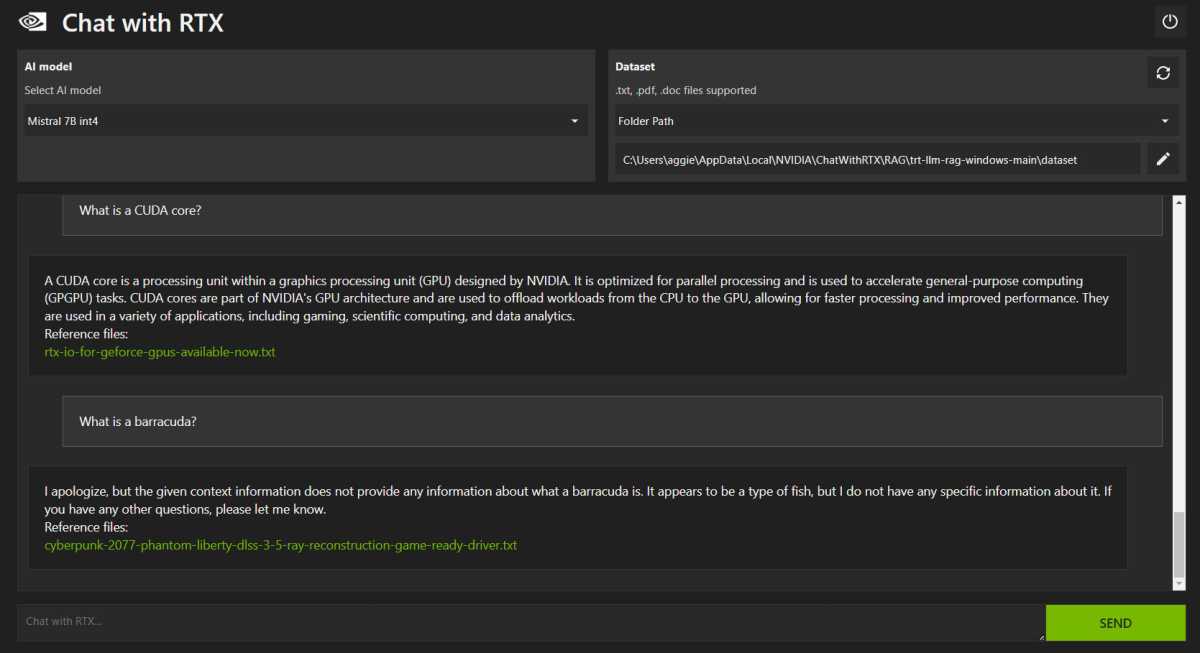

Chat with RTX is entirely text-based, and it comes "trained" on a large database of public text documents owned by Nvidia itself. In its raw form the model can "write" well enough, but its actual knowledge appears to be extremely limited. For example, it can give you a detailed breakdown of what a CUDA core is, but when I asked, "What is a barracuda?" it answered, "It appears to be a type of fish" and cited a Cyberpunk 2077 driver update as a reference. It could easily give me a seven-verse limerick about a beautiful printed circuit board (not an especially good one, mind you, but one that fulfilled the prompt), but it couldn't even attempt to tell me who won the War of 1812. If nothing else, it's an example of how heavily large language models depend on a vast array of input data in order to be useful.

Michael Crider/Foundry

To that end, you can manually extend Chat with RTX's capabilities by pointing it to a folder full of .txt, .pdf, and .doc files to "learn" from. This might be a bit more useful if you need to search through gigabytes of text and want context at the same time. Shockingly, as someone whose entire work output is online, I don't have much in the way of local text files for it to crawl.

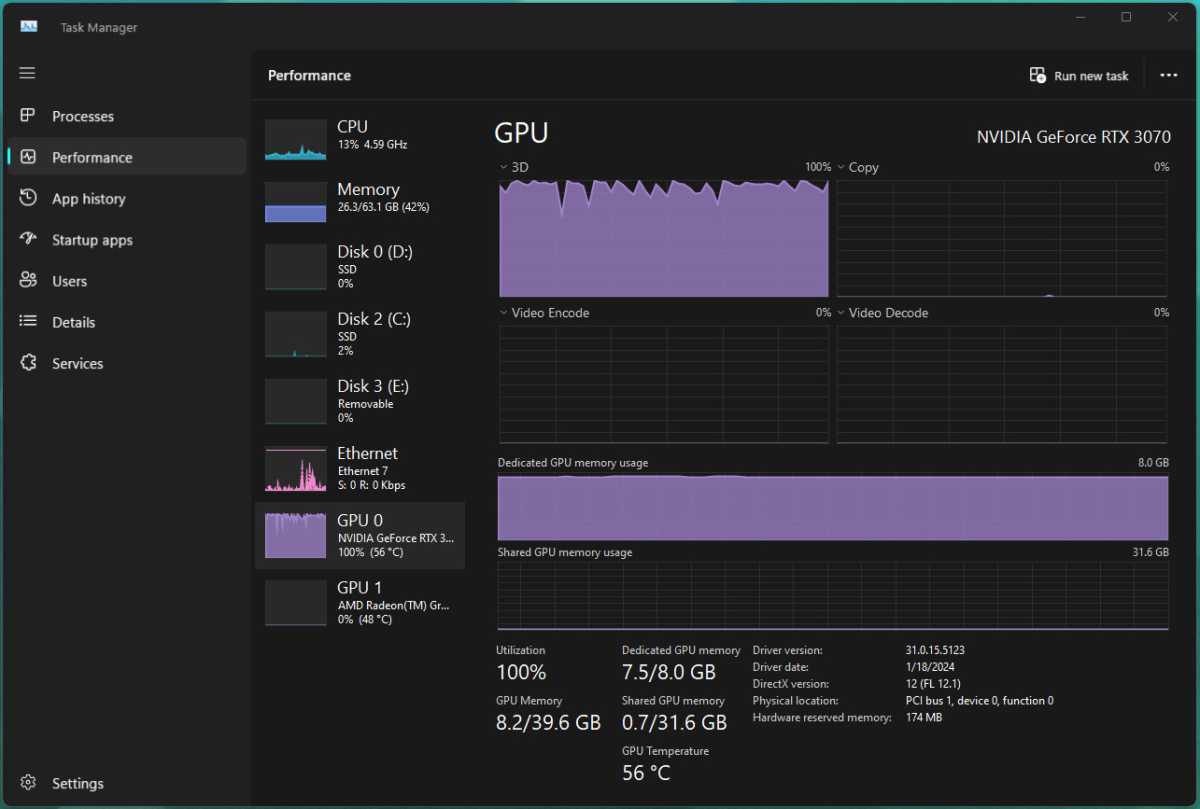

Pictured: My graphics card reading the Bible. Hard.

Michael Crider/Foundry

To test this potentially more useful capability, I downloaded publicly available text files of various Bible translations, hoping to give Chat with RTX some quizzes that my old Sunday School teachers could probably nail. But after an hour, the tool was still churning through less than 300MB of text files and running my RTX 3070 at almost 100%, with no sign of finishing, so more qualitative testing will have to wait for another day.

To run the beta, you'll need Windows 10 or 11 and an RTX 30- or 40-series GPU with at least 8GB of VRAM. It's also a fairly massive 35GB download for the AI program and its database of default training material, and Nvidia's file server seems to be getting hit hard at the moment, so just getting this thing onto your PC might be an exercise in patience.
