
A top AMD executive says that the company is considering deeper integration of AI across the Ryzen product line, but one ingredient is missing: client applications and operating systems that actually take advantage of it.

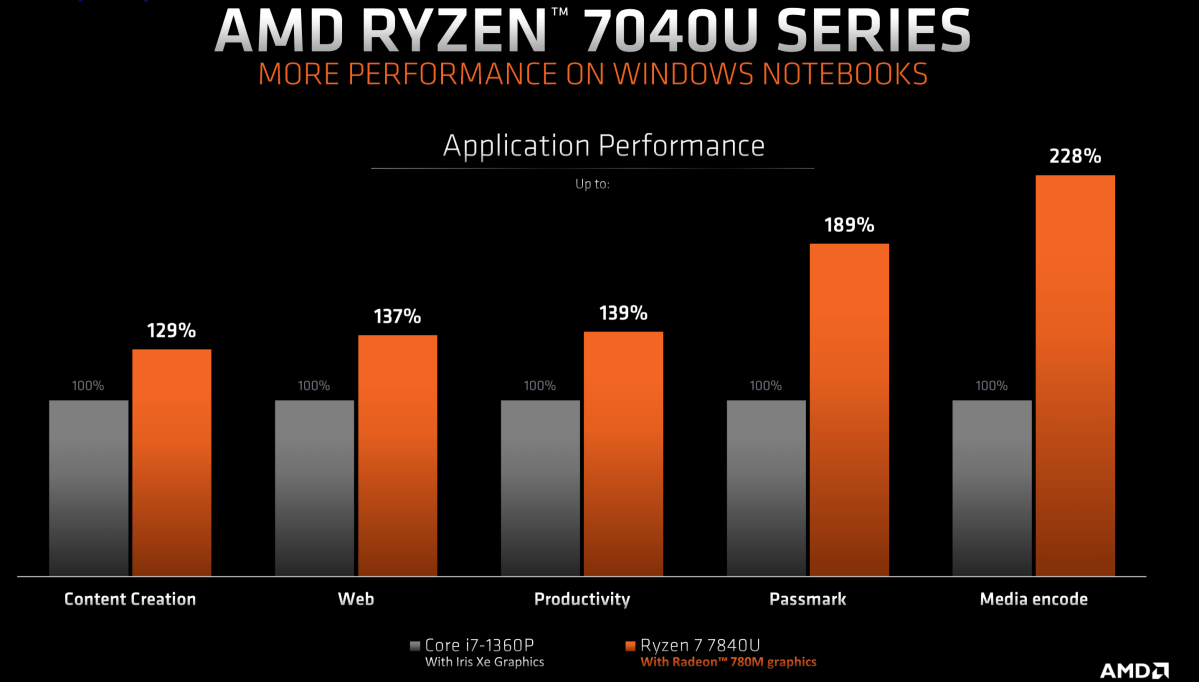

AMD launched the Ryzen 7000 series in January, which includes the Ryzen 7040HS. In early May, AMD announced the Ryzen 7040U, a lower-power version of the 7040HS. Both are the first chips to include Ryzen AI “XDNA” hardware, among the first instances of dedicated AI logic in PCs.

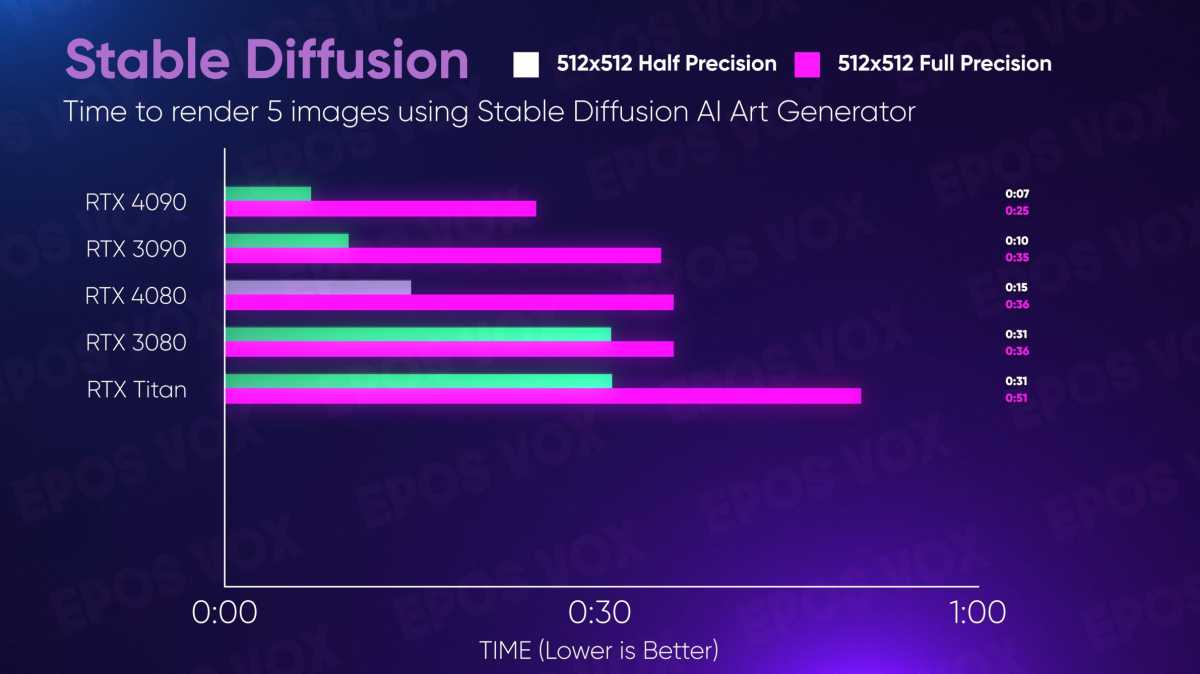

So far, however, AI remains a service that (apart from Stable Diffusion and a few others) runs only in the cloud. That makes AMD’s bet risky. Why dedicate costly silicon to Inference Processing Units (IPUs), advancing a function that nobody can really use on the PC?

That’s one of the questions we put to David McAfee, corporate vice president and general manager of the client channel business at AMD. The message, essentially, is that you’ll have to trust them, and even reframe the way you think about AI.

“We’re on the cusp of a series of announcements and events that will help shed more light into what’s happening with AI processing in general,” McAfee said. “We really view those as the tip of the iceberg.”

It’s not clear whether McAfee was referring to Google I/O, the Microsoft Build developer conference later this month, or something else entirely. But AMD appears to be planning a more bite-sized approach to AI than you might expect.

AMD: AI on the PC will be less complicated than you think

Sure, you can run an AI model on a Ryzen CPU or a Radeon GPU, provided that you have enough storage and memory. “But those are pretty heavy hammers to use when it comes to doing that type of compute,” McAfee said.

Instead, AMD sees AI on the PC as small, lightweight tasks that trigger frequently and run on an AI processor known as an Inference Processing Unit (IPU). Remember, AMD has used “AI” for some time to try to optimize the experience on your PC. It groups several technologies, including Precision Boost 2, mXFR, and Smart Prefetch, under the “SenseMI” label for Ryzen processors. IPUs could take that to another level.

“I think one of the nuances that comes along with the way that we’re looking at IPUs, and IPUs for the future, is more along the lines of that combination of a very specialized engine that does a certain type of compute, but does it in a very power-efficient way,” McAfee said. “And in a way that’s really integrated with the memory subsystem and the rest of the processor, because our expectation is as time goes on, these workloads that run on the GPU will not be sort of one-time events, but there’ll be more of a... I’m not going to say a constantly running set of workstreams, but it will be a more frequent type of compute that happens on your platform.”

AMD sees the IPU as something like a video decoder. Historically, video decoding could be brute-forced on a Ryzen CPU, but that takes an enormous amount of power to deliver the experience. “Or you can have this relatively small engine that’s a part of the chip design that does that with incredible efficiency,” McAfee said.

That probably means, at least for now, that you won’t see Ryzen AI IPUs on discrete cards, or even with their own memory subsystem. Stable Diffusion’s generative AI model runs in dedicated video RAM. But when asked about the concept of “AI RAM,” McAfee demurred. “That sounds really expensive,” he said.

AI’s future within Ryzen

XDNA is to Ryzen AI what RDNA is to Radeon: the first term defines the architecture, the second the brand. AMD gained its AI capabilities through its acquisition of Xilinx, but it still hasn’t detailed exactly what is inside. McAfee acknowledged that AMD and its competitors have work to do in describing Ryzen AI’s capabilities in terms that enthusiasts and consumers can understand, much as core counts and clock speeds help define CPUs.

“There is, let’s call it an architecture generation, that goes along with an IPU,” McAfee said. “And what we integrate this year versus what we integrate in a future product will likely have different architecture generations associated with them.”


The problem is that AI metrics, whether core counts, parallel streams, or neural layers, simply haven’t been defined for consumers, and there are no generally accepted AI metrics beyond trillions of operations per second (TOPS) and TOPS per watt.

“I think that we probably haven’t gotten to the point where there’s a good set of industry standard benchmarks or industry standard metrics to help users better understand Widget A from AMD versus Widget B from Qualcomm,” McAfee said. “I’d agree with you that the language and the benchmarks are not easy for users to understand which one to pick right now and which one they should be betting on.”

With Ryzen AI available in only a couple of Ryzen laptop processors, the natural question is how AMD will bring it to the rest of the CPU lineup. That, too, is under discussion, McAfee said. “I think we’re having the AI conversation all the way across the Ryzen product line,” he said.

Because of the extra cost of manufacturing the Ryzen AI core, AMD is evaluating what value Ryzen AI adds, especially in its budget processors. McAfee said that the end-user benefit “has to be a lot more concrete” before AMD would add Ryzen AI to its low-end mobile Ryzen chips.

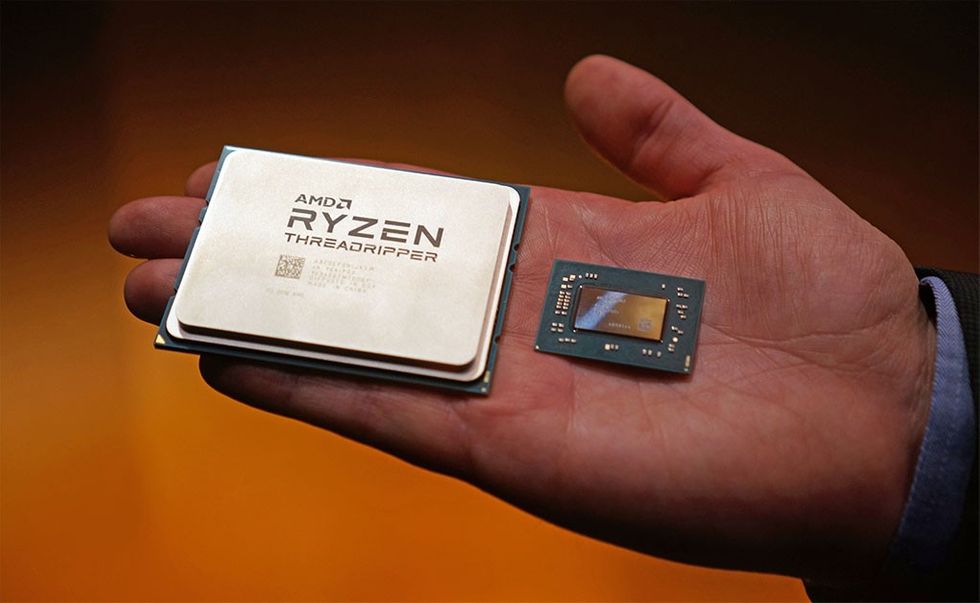

Will AMD add Ryzen AI to its desktop Ryzens? That, somewhat surprisingly, is less certain. McAfee weighed the question in terms of desktop power efficiency. Because desktops already have so much processing power, “maybe Ryzen AI becomes more of a testing tool as opposed to something that is driving the everyday value of the device,” he said. It’s possible that a high-core-count Threadripper could be used to train AI models, but not necessarily to run them, he added.


AMD does believe, though, that AI will take a seat at the table currently occupied by CPUs and GPUs.

“I really do believe there will be a point in time in the not-too-distant future where yes, as people think about the value of their system, it’s not just my CPU and my GPU, this becomes a third element of compute that does add significant value to their platform,” McAfee said.

AI’s next steps

Chip evolution has usually followed a fairly simple progression. Developers come up with a new app and program it to run on a general-purpose CPU. Over time, the industry settles on a specific task (video games, say), and specialized hardware follows. Inferencing chips have been developed for the datacenter for years, but app developers are still figuring out what AI can do, let alone what consumers can use it for.

Eventually, McAfee says, there will be two reasons for AI applications to run on your PC rather than in the cloud. The first is battery life. “There will be a point in time where those models reach a level of maturity or reach a practical application where it becomes the right step for the developer to quantize that model and to put it on, you know, local AI accelerators that live on a mobile PC platform for, you know, the battery life benefits,” he said.
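McAfee’s mention of developers “quantizing” a model refers, in general terms, to storing its weights at lower precision (for example, 8-bit integers instead of 32-bit floats) so the model needs less memory and less energy to run on a small local accelerator. The Python sketch below is a minimal illustration assuming a simple symmetric, per-tensor int8 scheme; it is not AMD’s Ryzen AI toolchain, and the function names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Hypothetical helper: symmetric per-tensor int8 quantization.

    Maps each float weight to an integer in [-127, 127] using a single
    scale factor shared by the whole tensor.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Pick the scale so the largest-magnitude weight maps to +/-127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clip to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Hypothetical helper: recover approximate float weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]
    q, scale = quantize_int8(weights)
    print(q)  # integer codes, one byte each instead of four
    print(dequantize_int8(q, scale))
```

Each restored weight lands within half a quantization step (`scale / 2`) of the original. Production toolchains typically layer per-channel scales, calibration data, and other refinements on top of this basic idea.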


The other reason? Security, McAfee added. As AI becomes part of everyday business, companies and even consumers will likely want their own private AI services so that their personal or business data doesn’t leak into the cloud. “I don’t want a public-facing instance scanning all of my email and documents, and potentially using that,” he said. “No.”

Software’s responsibility

McAfee declined to disclose what he knew of Microsoft’s roadmap, or to address speculation that Windows 12 may be more closely integrated with AI. Consumer AI applications could include games, such as NPCs that hold intelligent conversations rather than reciting scripted dialogue.


But, McAfee added, AMD, Intel, Qualcomm, and the rest of the hardware industry can’t be solely responsible for the success or failure of AI.

“Ultimately for this to be successful… It really boils down to, you know, does the software live up?” McAfee said. “Does the software and the user experience live up to the hype? I think that’s going to be the key. Over the next three years, the software and user experiences have to deliver that value, and move this from a really exciting, you know, sort of emergent technology that’s just making its way into the conversation on PCs, into something that potentially is rather transformational to the way that we think about performance, and devices, and how we use them.”
