Ghulam Jallani

In recent times, the emergence of deepfake technology has sparked concerns globally because of its potential to manipulate reality and public perception. The issue came to the forefront when a deepfake video featuring popular Bollywood actress Rashmika Mandanna went viral. The implications of such technological developments are deeply unsettling and call for a collective response to the ethical, legal, and societal challenges they pose.

The Rashmika Mandanna Deepfake Incident: A Disturbing Wake-Up Call

The recent deepfake video, which appears to show Rashmika Mandanna entering an elevator, has raised alarms about the misuse of this technology. The woman in the video, Zara Patel, expressed her profound distress over being unwittingly involved in the creation of the deepfake. Patel took to Instagram to convey her concerns, emphasizing the need for heightened caution when navigating social media platforms.

Rashmika Mandanna herself voiced her distress, calling the incident “extremely scary.” The potential harm caused by such deepfake videos goes beyond personal discomfort, extending to the broader vulnerabilities that individuals face because of the misuse of technology.

Global Outcry and Calls for Legal Action

Notably, veteran actor Amitabh Bachchan, who co-starred with Mandanna in the film ‘Goodbye,’ called for legal action against the creators of the deepfake video. Union Minister Rajeev Chandrasekhar stressed the legal obligations of social media platforms to combat misinformation and urged stringent measures against the dissemination of deepfakes.

This incident has brought into sharp focus the urgent need for comprehensive legal frameworks that deal specifically with the challenges posed by deepfake technology. The absence of specific regulations in India underscores the necessity of proactive measures to protect individuals and the broader digital landscape.

Understanding Deepfake Technology and its Global Implications

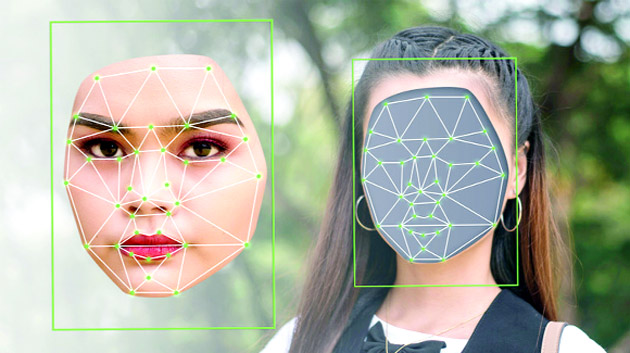

Deepfake technology, a product of powerful computers and deep learning, enables the manipulation of videos, images, and audio. The term ‘deepfake’ originated in 2017 and gained notoriety for its misuse in creating fabricated content, often for malicious purposes. One of the most concerning applications is the creation of synthetic media, such as deepfake videos, in which the face or voice of one person is seamlessly replaced with another’s.

The global response to deepfake challenges varies. The European Union has updated its Code of Practice on Disinformation to counter deepfakes, with fines for tech companies that fail to comply. In the United States, the bipartisan Deepfake Task Force Act aims to assist the Department of Homeland Security in countering deepfake technology. China has introduced comprehensive regulations to curb disinformation, mandating clear labeling and traceability of deep synthesis content.

India’s Stand and the Need for Comprehensive Legislation

In India, existing laws such as Sections 67 and 67A of the Information Technology Act (2000) can be applied to certain aspects of deepfakes. However, there is a glaring absence of specific regulations addressing the unique challenges posed by this technology. The Personal Data Protection Bill (2022) may offer some protection against the misuse of personal data but falls short of explicitly addressing deepfakes.

To effectively combat the menace of deepfakes, India must develop a robust legal framework that considers the potential implications for privacy, social stability, national security, and democracy. With the rapid evolution of technology, legislative measures must keep pace to safeguard individuals and society at large.

Global Collaboration and Technological Solutions

Addressing the deepfake dilemma requires a multifaceted approach. Social media platforms must invest in AI-powered algorithms to detect and flag manipulated content swiftly. Collaboration with fact-checking organizations can provide additional layers of verification and counter the spread of false information.

Blockchain technology emerges as a potential solution for creating an unchangeable record of digital media, ensuring transparency in verifying authenticity. This decentralized approach could discourage the creation and dissemination of malicious deepfakes.
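To make the record-keeping idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any deployed system: the `MediaLedger` class and its method names are hypothetical, and a single in-memory hash chain stands in for a real decentralized blockchain. Each entry stores the SHA-256 fingerprint of a media file together with the hash of the previous entry, so altering an earlier entry becomes detectable.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


class MediaLedger:
    """Toy append-only hash chain (hypothetical; not a real blockchain)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.blocks = []

    @staticmethod
    def _hash_record(record: dict) -> str:
        # Hash every field except the stored block hash itself.
        payload = {k: v for k, v in record.items() if k != "block_hash"}
        return sha256_hex(json.dumps(payload, sort_keys=True).encode("utf-8"))

    def register(self, media_bytes: bytes, publisher: str) -> dict:
        """Append a fingerprint of the media, linked to the previous entry."""
        prev_hash = self.blocks[-1]["block_hash"] if self.blocks else self.GENESIS
        record = {
            "index": len(self.blocks),
            "media_hash": sha256_hex(media_bytes),
            "publisher": publisher,
            "prev_hash": prev_hash,
        }
        record["block_hash"] = self._hash_record(record)
        self.blocks.append(record)
        return record

    def is_registered(self, media_bytes: bytes) -> bool:
        """True only if a bit-identical file was registered earlier."""
        fingerprint = sha256_hex(media_bytes)
        return any(b["media_hash"] == fingerprint for b in self.blocks)

    def chain_is_intact(self) -> bool:
        """Recompute every link; any edited entry breaks the chain."""
        prev = self.GENESIS
        for block in self.blocks:
            if block["prev_hash"] != prev:
                return False
            if block["block_hash"] != self._hash_record(block):
                return False
            prev = block["block_hash"]
        return True
```

A publisher would call `register` when releasing a clip, and a platform could later call `is_registered` on an uploaded file. One limit of this sketch is that an exact hash only matches bit-identical copies, so a re-encoded or cropped version of a genuine video would not match and would need a different comparison technique.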

The Way Forward

As society grapples with the ramifications of deepfake technology, several measures can pave the way for a safer digital future:

AI-Powered Social Media Fact-Checking: Engage social media platforms to invest in AI-powered algorithms that automatically detect and flag potentially manipulated content.

Blockchain-based Deepfake Verification: Leverage blockchain technology to create an immutable record of digital media, enhancing transparency in verifying authenticity.

Deepfake Impact Mitigation Policy: Establish a fund to assist individuals and organizations affected by deepfakes, providing support and redressal mechanisms.

Deepfake Accountability Act (DAA): Introduce legislation focused on addressing the challenges posed by deepfakes, ensuring accountability in their creation and distribution.

Punishments and Public Awareness Campaigns: Implement laws that punish bad-faith actors and protect individuals from digital manipulation. Public awareness campaigns on media literacy are crucial to combat the spread of deepfakes.

In navigating the perils of deepfake technology, global vigilance and collaborative efforts are paramount. The Rashmika Mandanna incident serves as a stark reminder of the urgency of addressing this evolving threat comprehensively. As technology continues to advance, societies must adapt swiftly to safeguard the integrity of digital spaces and protect individuals from the nefarious impacts of deepfakes.
