It's not enough to champion AI hardware that supports local large language models, generative AI, and the like. Hardware vendors need to step up and serve as a middleman, if not an outright developer, for these local AI apps, too.

Qualcomm almost has it. At MWC 2024 (formerly known as Mobile World Congress, one of the world's largest mobile trade shows), the company this week announced the Qualcomm AI Hub, a repository of more than 75 AI models specifically optimized for Qualcomm and Snapdragon platforms. Qualcomm also showed off a seven-billion-parameter local LLM, running on a (presumably Snapdragon-powered) PC, that can accept audio input. Finally, Qualcomm demonstrated another seven-billion-parameter LLM running on Snapdragon phones.

That's all well and good, but more PC and chip vendors need to demonstrate real-world examples of AI. Qualcomm's AI Hub is a good start, even if it's a hub for developers. But the only way chip and PC vendors are going to convince consumers to use local AI is to make it easy, cheap, and so, so available. Yet very few have stepped up to do so.

The PC industry tends to seize on any trend it can, because PC hardware sales are perpetually undercut by smartphones, the cloud, and other devices that threaten its dominance. While laptop and Chromebook sales soared during the pandemic, they've since come back to earth, hard. The argument that you'll need a local PC to run the next big thing (AI), and that you'll need to pay for high-end hardware to do it, is one that should have the PC industry slavering.

But the first examples of AI ran in the cloud, which puts the PC industry behind. This may sound like a point I keep harping on, but I'll say it again: Microsoft doesn't seem particularly invested in local AI just yet. Everything with Microsoft's "Copilot" brand in its name runs in the cloud, and generally requires either a subscription or at least a Microsoft account to use. (Copilot can be used with a local Windows account, but only a limited number of times before it forces you to sign in to keep using it.)

Most people probably aren't convinced they need to use AI at all, let alone run it locally on their PC. That's the problem chip and hardware vendors need to solve. But the solution isn't hardware; it's software.

Mark Hachman / IDG

The answer is apps: lots and lots of apps

Microsoft added an AI Hub to the Microsoft Store app last year, but even today it feels a little lackluster. Most of the chatbot "apps" available there actually run in the cloud and require a subscription, which makes little sense, of course, when Copilot is essentially free. Ditto for apps like Adobe Lightroom and ACDSee: they're subscription-based, too, which is exactly what a local app could avoid by tapping the power of your own PC.

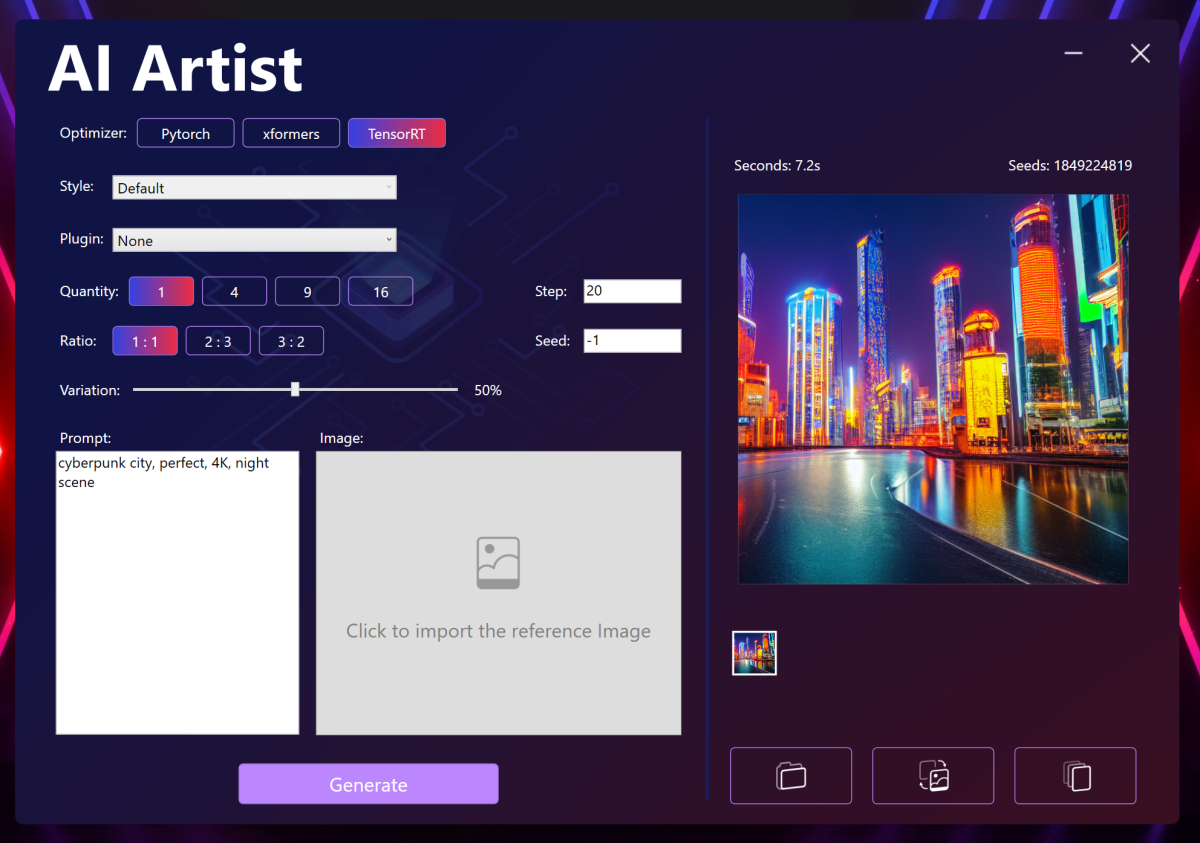

That leaves hardware vendors to carry the torch. And some have: MSI, for example, makes an "AI Artist" generative AI app available for its latest MSI Raider GE78 gaming laptop. While it's a little clunky and slow, it at least provides a one-click installation from a trusted vendor.

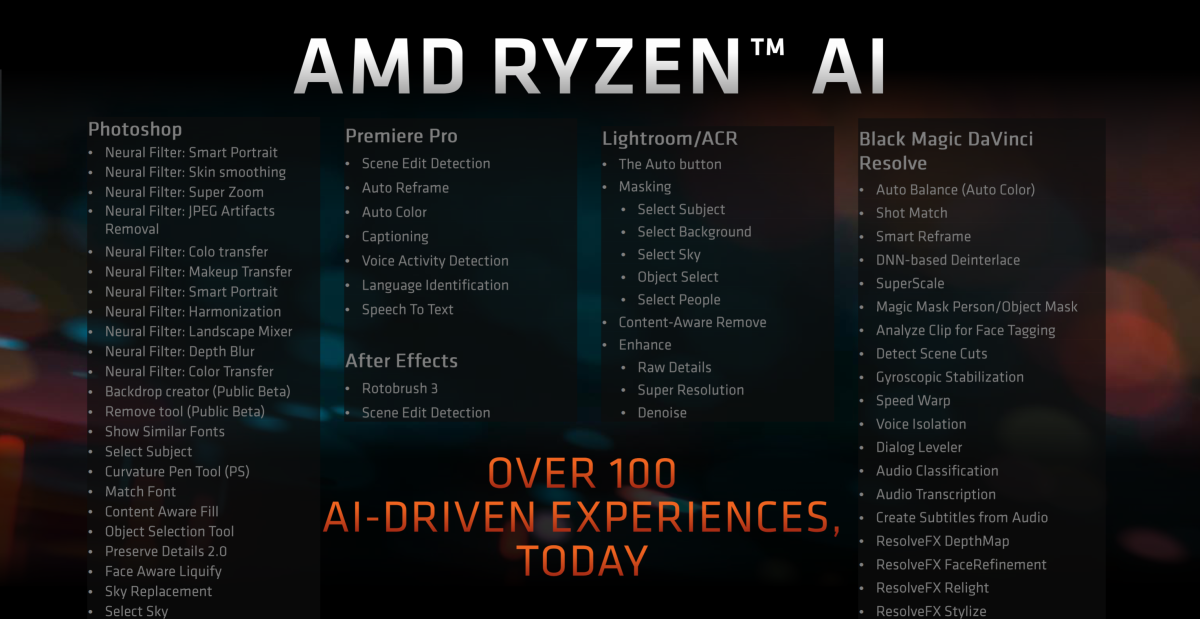

But that's an oasis in a desert of local AI. Both AMD and Intel tout the performance of their chips on AI language models like Llama 2. That makes sense for people who have already tried AI chatbots and are familiar with some of the various models and how they work. AMD, for example, laid out specifically which applications take advantage of its own NPU, which it brands as Ryzen AI.

With respect, that's not quite good enough. It isn't enough that Intel launched an AI development fund last year. What chip vendors need to do is get these AI apps into consumers' hands.

How? One method is already tried and true: trial subscriptions to AI-powered apps like Adobe Photoshop, Blackmagic's DaVinci Resolve, or Topaz. Customers traditionally dislike bloatware, but I think that if the PC industry is going to market AI PCs, it's going to have to take a stab at a creator-class PC that leans into it. Instead of running with "Intel Inside," start marketing "AI bundles." Lean into the software, not the hardware. Start putting app logos on the outside of the box, too. Would Adobe be willing to put its stamp on a "Photoshop-certified" PC? It's a thought.

Otherwise, I'd suggest reviving one of the better ideas Intel had: the gaming bundle. Today, Intel and AMD may bundle a game like Assassin's Creed Mirage with the purchase of a qualifying CPU or motherboard. But not too long ago, you could download several games, for free, that showcased the power of the CPU. (Here's an MSI example from 2018, below.)

Running AI locally does offer some compelling advantages: privacy, for one. But the convenience of Copilot and Bard is a strong argument for using those sanitized tools instead. Consumers are fickle, and they won't care unless someone shows them why they should.

If AMD, Intel, and eventually Qualcomm plan to make local AI a reality, they're going to have to make that option simple, cheap, and ubiquitous. And with the AI hype train already barreling full speed ahead, they need to do it yesterday.
