[ad_1]

Psychological traits and knowledge assortment and evaluation in boxing

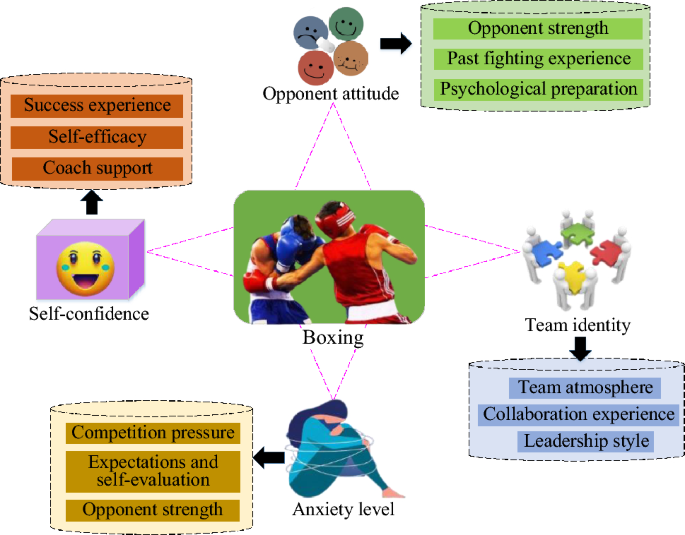

Boxing is a high-intensity sport, which requires athletes to maintain calm, focus, cope with stress and keep self-confidence within the competitors34,35. Factors comparable to nervousness stage, self-confidence, group identification and opponent’s perspective could have a major affect on the efficiency of boxers. Understanding and quantifying these psychological traits is essential for personalised coaching and bettering athletes’ aggressive stage36,37,38,39. The psychological traits and influencing elements of boxing are proven in Fig. 1.

In this research, with the intention to successfully perceive the psychological state of boxers, reference and analysis, when designing the psychological scale of boxing, it’s wanted to think about the scale and particular manifestations of varied psychological traits. Table 1 reveals the design of psychological scale, overlaying 4 dimensions: nervousness stage, self-confidence, group identification and opponent’s perspective.

In this questionnaire, the respondents are boxers, and the investigation time is from March 1, 2023 to June 1, 2023. The complete variety of questionnaires distributed was 452, of which 431 questionnaires had been recovered, with a restoration price of 95.35%, which indicated that this questionnaire was an efficient survey. Results Likert five-level scale methodology was used, and the reply choices had been divided into 5 sorts: very agree = 5, agree = 4, basic = 3, disagree = 2, and really disagree = 1. The complete questionnaire survey course of strictly follows moral ideas, and doesn’t contain private privateness. All members are over 18 years previous, and the participation course of is carried out with the consent of the members. And use SPSS 24.0 software program for statistical evaluation.

Analysis of boxing motion classification and recognition mannequin primarily based on CNN assisted by sports activities psychology

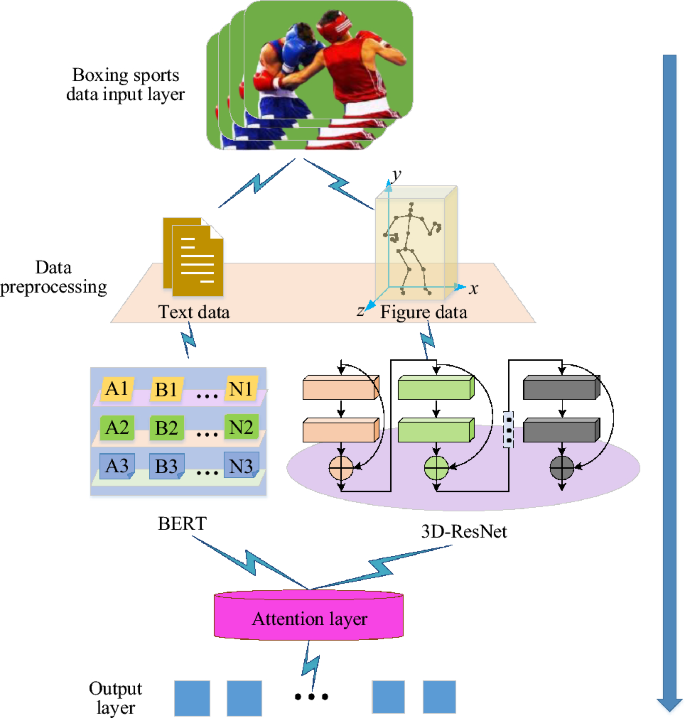

In this research, an modern boxing motion classification and recognition mannequin is proposed, aiming at combining sports activities psychology with superior deep studying know-how. In this research, an modern mannequin is proposed, which mixes the BERT algorithm to course of textual content knowledge and the 3D-RESNET community to extract the spatial–temporal options of video photographs, aiming at a extra complete understanding of athletes’ psychology and motion state. The innovation of this methodology is that it gives a brand-new analysis perspective for boxing motion classification by fusing psychological statistical textual content and video picture knowledge.

Firstly, the BERT algorithm40,41 is adopted for athletes’ psychological statistical texts, which is a pre-trained deep bidirectional transformer mannequin, specifically designed to know the complicated context of pure language texts. By pre-training large-scale textual content knowledge, BERT mannequin can seize wealthy language options and supply robust help for understanding athletes’ psychological state. In order to additional enhance the mannequin’s capability to cope with boxing-specific texts, a self-defined consideration mechanism layer is added on the premise of BERT, in order that the mannequin will pay extra consideration to the textual content info carefully associated to boxing habits, and thus seize the psychological traits of athletes extra precisely.

Secondly, for the processing of boxing video photographs, 3D-RESNET community42,43,44 is chosen. Compared with the normal 2D convolutional community, 3D-RESNET can successfully extract the spatial–temporal options in video sequences by introducing the time dimension, which is essential for understanding steady actions and habits patterns. The unique structure of 3D-RESNET is optimized, together with including a deeply separable convolution layer to scale back the complexity of the mannequin, and introducing a time consideration module to enhance the flexibility to seize the time dependence of motion sequences. These enhancements allow the community not solely to course of video knowledge extra effectively, but additionally to establish and classify complicated boxing actions extra precisely.

By fusing the textual content knowledge processed by BERT algorithm with the video picture options extracted by 3D-RESNET community, the brand new mannequin can absolutely perceive the athletes’ state from two dimensions: on the one hand, it may possibly analyze the athletes’ psychological state and technique selection from psychological texts. On the opposite hand, it captures athletes’ motion particulars and technical execution via video photographs. This multi-modal fusion methodology gives a brand new perspective for boxing motion classification and athlete state evaluation, and is anticipated to open new analysis instructions in sports activities science and human–laptop interplay.

Among them, the boxing motion classification and recognition mannequin primarily based on BERT fusion 3D-ResWeb is proven in Fig. 2.

In Fig. 2, on this mannequin, BERT algorithm is used to enter the obtained psychological statistical textual content of boxers into the mannequin based on the psychological scale and convert it right into a real-value vector illustration. In this research, an consideration layer primarily based on self-attention mechanism is launched, aiming at successfully integrating the characteristic representations from BERT (processing textual content knowledge) and ResWeb-3D (processing video sequence knowledge), and calculating the eye weight at every time step via a collection of neural community layers. The design of this layer is impressed by the Transformer structure, which dynamically adjusts the significance of characteristic illustration by calculating the eye scores between completely different enter parts.

In apply, firstly, textual content and video options are extracted from BERT and ResWeb-3D fashions, respectively. For BERT, the output of the final layer is obtained because the textual content characteristic vector. For ResWeb-3D, the output of the final layer of the community can be extracted because the video characteristic vector. Then, the 2 characteristic vectors are linked to kind a unified characteristic illustration. On this foundation, the “attention layer” is launched to behave on this unified characteristic illustration. Firstly, it calculates the significance of every aspect within the characteristic for boxing habits recognition, and this course of is realized by coaching the load matrix. Then, based on the calculated consideration rating, the characteristic vectors are weighted and summed to generate an attention-weighted characteristic illustration. This weighted characteristic illustration captures probably the most vital info in textual content and video knowledge, and gives richer and extra correct characteristic enter for subsequent classification duties. This consideration mechanism allows the mannequin to seize the important thing info associated to boxing motion in video sequences extra successfully, thus bettering the efficiency and generalization capability of the mannequin.

Assuming that the one-hot vector similar to the enter sequence x is represented as (e^{t} in R^ V proper), its phrase vector (E^{t}) may be represented by Eq. (1):

$$E^{t} = e^{t} W^{t}$$

(1)

(W^{t} in R^ occasions e) refers back to the educated phrase vector matrix. (left| V proper|) refers back to the vocabulary measurement, and e refers back to the amount dimension.

The block vector is often used to file which partition the phrase to be encoded belongs to. The block vector (E^{s}) is expressed as Eq. (2):

$$E^{s} = e^{s} W^{s}$$

(2)

(E^{s}) refers to changing block coding into real-valued vectors via block vector matrix (W^{s}). (W^{s} in R^ occasions e) refers to dam vector matrix, and (left| S proper|) refers back to the variety of blocks.

The place vector is used to encode absolutely the place of every phrase, which may be expressed as Eq. (3):

$$E^{p} = e^{p} W^{p}$$

(3)

(W^{p} in R^{N occasions e}) refers back to the place vector and N refers back to the most place size.

In this mannequin, 3D-ResWeb community is used to categorise boxing actions by figuring out video photographs. ResWeb-50 is used because the spine community. ResWeb-50 consists of an preliminary convolution layer, adopted by 16 residual blocks, every of which consists of three convolution layers, with a complete of 48 convolution layers, plus an preliminary convolution layer, and a mean pooling layer, the entire variety of layers reaches 50. This depth allows the community to seize wealthy characteristic representations, and the design of its residual connection is useful to alleviate the issue of gradient disappearance in deep community coaching.

For 3D-CNN, a community construction consisting of 4 convolution layers is designed, and every convolution layer is adopted by Batch Normalization and ReLU activation perform. After each two convolution layers, a 3D most pooling layer is used to scale back the characteristic dimension and extract extra summary options. Finally, the ultimate motion class prediction is output via a completely linked layer. (Q = left( {q_{1} ,q_{2} , cdots ,q_{T} } proper) = left{ {q_{i} } proper}_{i = 1}^{T}) is used to consult with boxing sports activities video with T frames, and sliding window is used to divide it right into a sequence (V^{N}) of N boxing video segments, as proven in Eq. (4):

$$V^{N} = left( {v_{1} ,v_{2} , cdots ,v_{N} } proper)$$

(4)

Each video section (v_{i}) is enter into 3D-ResWeb to extract its corresponding fixed-length video characteristic expression (f_{i} in R^{d}), and the enter sequence may be expressed as Eq. (5):

$$F^{N} = left( {f_{1} ,f_{2} , cdots ,f_{N} } proper) = left{ {Phi_{theta } left( {v_{i} } proper)} proper}_{i = 1}^{N}$$

(5)

(Phi_{theta } left( cdot proper)) refers to 3D-ResWeb and (theta) refers to community parameters.

In order to seize the motion info (V_{ij}^{xyz}) in a number of consecutive frames in boxing sports activities video, options are calculated from spatial and temporal dimensions. The worth of the unit whose place coordinate is (x, y, z) within the j-th characteristic map of the i-th layer, as proven in Eq. (6):

$$V_{ij}^{xyz} = fleft( {b_{ij} + sumlimits_{r} {sumlimits_{l = 0}^{{l_{i} – 1}} {sumlimits_{m = 0}^{{m_{i} – 1}} {sumlimits_{n = 0}^{{n_{i} – 1}} {w_{ijr}^{lmn} v_{{left( {i – 1} proper)r}}^{{left( {x + l} proper)left( {y + m} proper)left( {z + n} proper)}} } } } } } proper)$$

(6)

(n_{i}) refers back to the time dimension of the 3D convolution kernel. (w_{ijr}^{lmn}) refers back to the weight worth of the convolution kernel whose place (l, m, n) is linked with the r characteristic map, and (v) refers back to the motion info of every video section.

In this research, ReLU perform is used as activation perform. This perform could make the parameters of the mannequin sparse, thus lowering over-fitting. In addition, it may possibly additionally scale back the calculation of the mannequin. ReLU activation perform definition is proven as Eq. (7):

$$fleft( x proper) = max left( {0,x} proper) = left{ {start{array}{*{20}c} 0 & {x le 0} x & {x > 0} finish{array} } proper.$$

(7)

The calculation of most pooling within the mannequin is proven in Eq. (8):

$$V_{x,y,z} = mathop {max }limits_{{0 le i le s_{1} ,0 le j le s_{2} ,0 le okay le s_{3} }} left( {mu_{x occasions s + i,y occasions t + j,z occasions r + okay} } proper)$$

(8)

(mu) refers back to the three-dimensional enter vector, V refers back to the output after pooling operation, and s, t, and r consult with the sampling step measurement within the route.

For a sure enter characteristic (fleft( {v_{ti} } proper)), firstly, two convolution layers are respectively used to map (fleft( {v_{ti} } proper)) right into a Ok vector and a Q vector, as proven in Eq. (9):

$$left{ {start{array}{*{20}c} {K_{ti} = W_{Ok} fleft( {v_{ti} } proper)} {Q_{ti} = W_{Q} fleft( {v_{ti} } proper)} finish{array} } proper.$$

(9)

(W_{Ok}) and (W_{Q}) are the load matrices similar to the 2 convolution layers, the Q vector and the Ok vector of node (v_{ti}), respectively. Next, the interior product of the sum of (Q_{ti}) and (K_{ti}) is calculated, as proven in Eq. (10):

$$u_{{left( {t,i} proper) to left( {t,j} proper)}} = leftlangle {Q_{ti} ,K_{tj} } rightrangle$$

(10)

Nodes (v_{ti}) and (v_{tj}) are in the identical time step. (leftlangle , rightrangle) stands for interior product image. The interior product (u_{{left( {t,i} proper) to left( {t,j} proper)}}) known as the similarity between nodes (v_{ti}) and (v_{tj}).

In order to enhance the generalization capability of the mannequin, it’s essential to normalize the coordinate knowledge concerned within the calculation primarily based on the picture decision, as proven in Eq. (11):

$$left{ {start{array}{*{20}c} {x^{prime}_{i} = frac{{x_{i} }}{{x_{width} }}} {y^{prime}_{i} = frac{{y_{i} }}{{y_{peak} }}} {z^{prime}_{i} = frac{{z_{i} }}{{z_{lenght} }}} finish{array} } proper.$$

(11)

(x_{i} ,y_{i} ,z_{i}) are the abscissa, vertical coordinate and vertical coordinate of the important thing level i within the picture. (x_{width}), (y_{peak}) and (z_{lenght}) are the width, peak and size of a body picture. (x_{i}{prime} ,y_{i}{prime} ,z_{i}{prime}) are the normalized abscissa, vertical coordinate and vertical coordinate of the important thing level i.

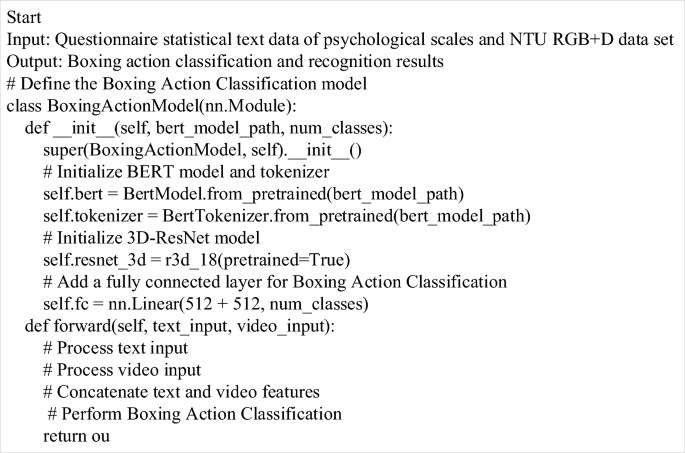

Therefore, by studying the weights of any two physique joints in numerous boxing actions, this data-driven means will increase the universality of the mannequin, in order that the mannequin can successfully establish and predict actions within the face of numerous knowledge. The pseudo code of this mannequin is proven in Fig. 3.

[adinserter block=”4″]

[ad_2]

Source link