
You probably already own an AI PC.

Over the past few months, PC makers have loudly beaten the drum for the AI PC, in concert with AMD, Intel, and Qualcomm. It's no secret that "AI" is the new "metaverse," and executives and investors alike want to use AI to boost sales and stock prices.

And it's true that AI can take advantage of the NPUs found in chips like Intel's Core Ultra, the brand Intel is positioning as synonymous with on-chip AI. The same goes for AMD's Ryzen 8000 series, which beat Intel to the desktop with an NPU, as well as Qualcomm's Snapdragon X Elite.

It's just that the integrated NPUs inside the Core Ultra right now (Meteor Lake, with Lunar Lake waiting in the wings) don't play the outsized role in AI computation that they're being positioned to play. Instead, the more traditional sources of computational horsepower (the CPU and especially the GPU) contribute more to the task.

It's important to note a few things. First, actually benchmarking AI performance is something absolutely everyone is wrestling with. "AI" is made up of quite divergent tasks: image generation, such as using Stable Diffusion; large language models (LLMs), the chatbots popularized by the (cloud-based) Microsoft Copilot and Google Bard; and a number of application-specific enhancements, such as the AI features in Adobe Premiere and Lightroom. Combine the numerous variables in LLMs alone (frameworks, models, and quantization, all of which affect how chatbots run on a PC) with the pace at which those variables change, and it's very difficult to pick a winner that stays a winner for more than a moment.

The next point, though, is one we can make with some certainty: benchmarking works best when you eliminate as many variables as possible. And that's what we can do with one small piece of the puzzle: how much the CPU, GPU, and NPU each contribute to AI calculations performed by Intel's Core Ultra chip, Meteor Lake.

Mark Hachman / IDG

The NPU isn't the engine of on-chip AI right now. The GPU is

We're not trying to determine how well Meteor Lake performs in AI overall. What we can do is perform a reality check on how much the NPU matters in AI.

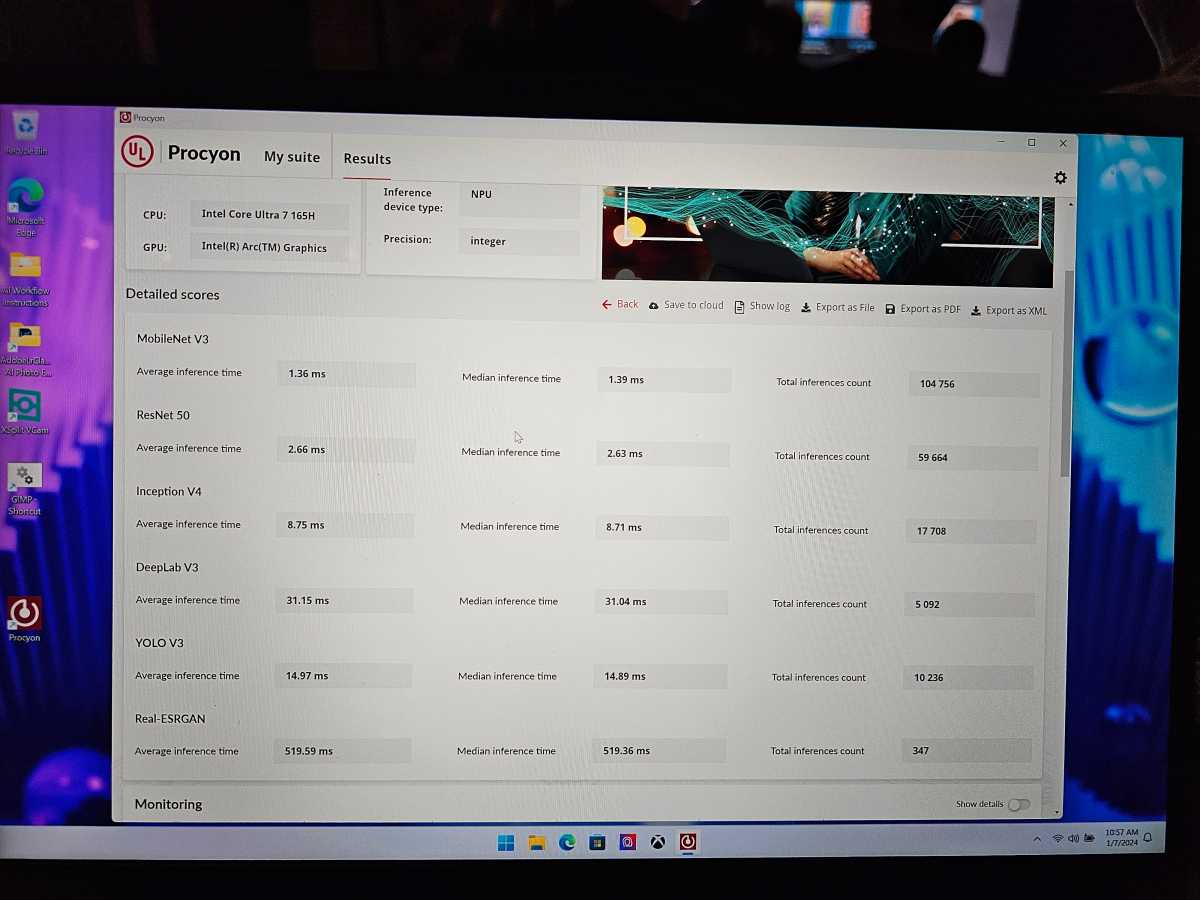

The test we're using is UL's Procyon AI inference benchmark, which measures how effectively a processor performs when handling various large language models. Crucially, it lets the tester break the results down, comparing the CPU, GPU, and NPU.

In this case, we tested the Core Ultra 7 165H inside an MSI laptop, provided for testing during an Intel benchmarking day at CES 2024. (Much of my time was spent interviewing Dan Rogers of Intel, but I was able to get some tests in.) Procyon runs the LLMs on the selected processor and calculates a score based on performance, latency, and so on.

Without further ado, here are the numbers:

- Procyon (OpenVINO) NPU: 356

- Procyon (OpenVINO) GPU: 552

- Procyon (OpenVINO) CPU: 196

The Procyon tests proved a couple of points. First, the NPU does make a difference: compared with the performance and efficiency cores of the CPU, the NPU scores 82 percent higher, all by itself. But the GPU scores 182 percent higher than the CPU (about 2.8 times its score), and 55 percent higher than the NPU.
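The percentages follow directly from the three scores, and it is easy to mix up "X percent of" a score with "X percent higher than" it. Here is a minimal Python sketch of the arithmetic, assuming the Procyon scores can be compared as simple ratios where higher is better; the helper names `percent_of` and `percent_higher` are our own, not anything from UL.

```python
# Procyon (OpenVINO) scores for the Core Ultra 7 165H, as listed above.
SCORES = {"NPU": 356, "GPU": 552, "CPU": 196}


def percent_of(a: float, b: float) -> float:
    """Score a expressed as a percentage of score b."""
    return a / b * 100.0


def percent_higher(a: float, b: float) -> float:
    """How much higher score a is than score b, in percent."""
    return (a / b - 1.0) * 100.0


if __name__ == "__main__":
    # Compare each faster block against a slower one.
    for fast, slow in [("NPU", "CPU"), ("GPU", "CPU"), ("GPU", "NPU")]:
        a, b = SCORES[fast], SCORES[slow]
        print(
            f"{fast} vs {slow}: {percent_of(a, b):.0f}% of the {slow} score "
            f"({percent_higher(a, b):.0f}% higher)"
        )
```

Run as a script, it shows the GPU at roughly 282 percent of the CPU's score, which is the same thing as 182 percent higher.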


Put another way: if you value AI, buy a big, beefy graphics card or GPU first.

But the second point is less obvious: yes, you can run AI applications on a CPU or GPU, without any need for a dedicated AI logic block. All the Procyon tests demonstrate is that some blocks are more effective than others.

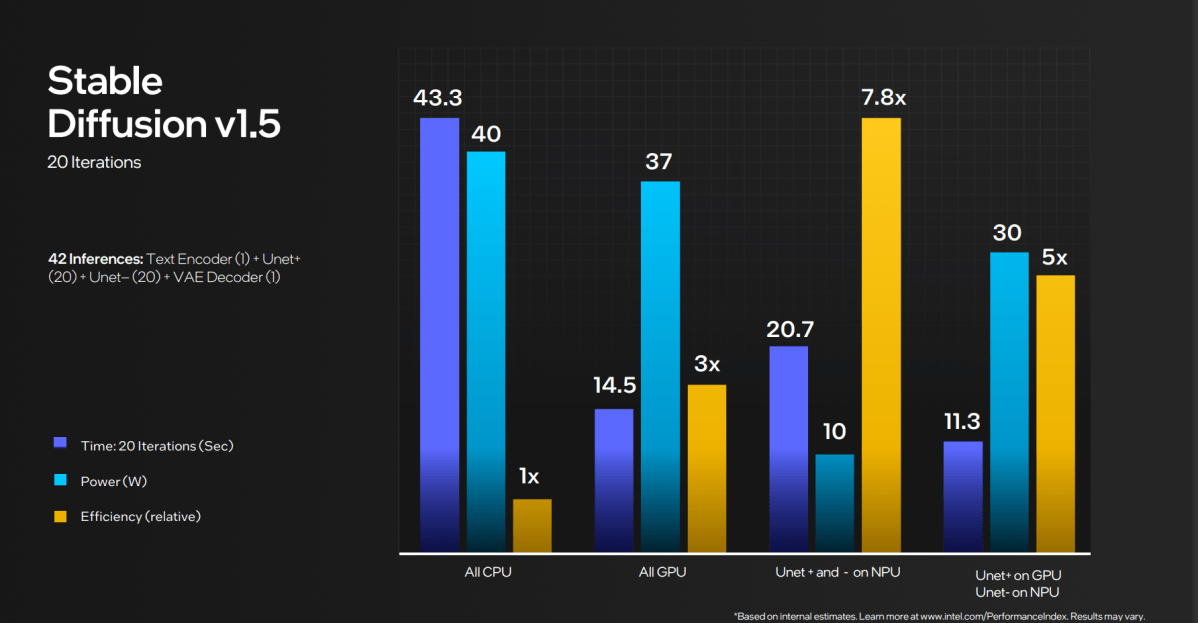

What Intel is saying (and, to be fair, has been saying all along) is that the NPU is more efficient. In the real world, "efficiency" is chipmaker code for "long battery life." At the same time, Intel has tried to emphasize that the CPU, GPU, and NPU can work together.


In this case, the NPU's efficiency pays off in AI applications that run for a long time, probably on battery. The best example is a lengthy Microsoft Teams call from the depths of a hotel room or convention center (like CES!), where AI is being used to filter out noise and background activity.

AI art applications like Stable Diffusion originally launched as a way to generate AI art locally using the power of your GPU, along with plenty of available VRAM. But over time, AI applications have evolved to run on less powerful configurations, including entirely on the CPU. This is a familiar analogy: you're not going to run a graphics-intensive game like Crysis well on integrated hardware, but it should run, just very, very slowly. AI LLMs and chatbots behave the same way, "thinking" for a long time about their responses and then "typing" them out very slowly. LLMs that can run on a GPU will perform better, and cloud-based solutions will be much faster.

However, AI will evolve

It's interesting, too, that (as of this writing) UL's Procyon app recognizes the CPU and the GPU in the Ryzen AI-powered AMD Ryzen 7040, but not the NPU. We're in the very early days of AI, when not even the basic capabilities of the chips are recognized by the applications designed to use them. This complicates testing even further.

The point, however, is that you don't need an NPU to run AI on your PC, especially if you already have a gaming laptop or desktop. NPUs from AMD, Intel, and Qualcomm will be nice to have, but they're not must-haves, either.


It won't always be this way, though. Intel is promising that the NPU in the upcoming Lunar Lake chip, due at the end of this year, will deliver three times the NPU performance of Meteor Lake. It isn't saying anything about CPU or GPU performance. It's very possible that, over time, the NPUs in various PC chips will grow until their AI performance is massively disproportionate to that of the other parts of the chip. And if not, a slew of AI accelerator startups hope to become the 3dfx of the AI world.

For now, though, we're here to take a deep breath as 2024 begins. New AI PCs matter, as do the new NPUs. But consumers probably won't care as much as chipmakers do whether AI is running on their PC or in the cloud, no matter how loud the hype gets. And for those who do care, the NPU is just one piece of the overall solution.
