[ad_1]

Tom Hanks didn’t simply name me to pitch me a component, however it positive sounds prefer it.

Ever since PCWorld started masking the rise of various AI applications like AI art, I’ve been poking round within the code repositories in GitHub and hyperlinks inside Reddit, the place folks will put up tweaks to their very own AI fashions for varied approaches.

Some of those fashions truly find yourself on business websites, which both roll their very own algorithms or adapt others which have printed as open supply. An important instance of an current AI audio website is Uberduck.ai, which gives actually lots of of preprogrammed fashions. Enter the textual content within the textual content subject and you may have a digital Elon Musk, Bill Gates, Peggy Hill, Daffy Duck, Alex Trebek, Beavis, The Joker, and even Siri learn out your pre-programmed strains.

We uploaded a faux Bill Clinton praising PCWorld final yr and the mannequin already sounds fairly good.

Training an AI to breed speech entails importing clear voice samples. The AI “learns” how the speaker combines sounds with the aim into studying these relationships, perfecting them, and imitating the outcomes. If you’re acquainted with the wonderful 1992 thriller Sneakers (with an all-star solid of Robert Redford, Sidney Poitier, and Ben Kingsley, amongst others), then in regards to the scene through which the characters must “crack” a biometric voice password by recording a voice pattern of the goal’s voice. This is nearly the very same factor.

Normally, assembling a superb voice mannequin can take fairly a bit of coaching, with prolonged samples to point how a specific particular person speaks. In the previous few days, nevertheless, one thing new has emerged: Microsoft Vall-E, a research paper (with dwell examples) of a synthesized voice that requires only a few seconds of supply audio to generate a totally programmable voice.

Naturally, AI researchers and different AI groupies needed to know if the Vall-E mannequin had been launched to the general public but. The reply is not any, although you possibly can play with one other mannequin if you want, known as Tortoise. (The writer notes that it’s known as Tortoise as a result of it’s gradual, which it’s, however it works.)

Train your individual AI voice with Tortoise

What makes Tortoise fascinating is that you could prepare the mannequin on no matter voice you select just by importing just a few audio clips. The Tortoise GitHub page notes that you must have just a few clips of a couple of dozen seconds or so. You’ll want to avoid wasting them as a .WAV file with a selected high quality.

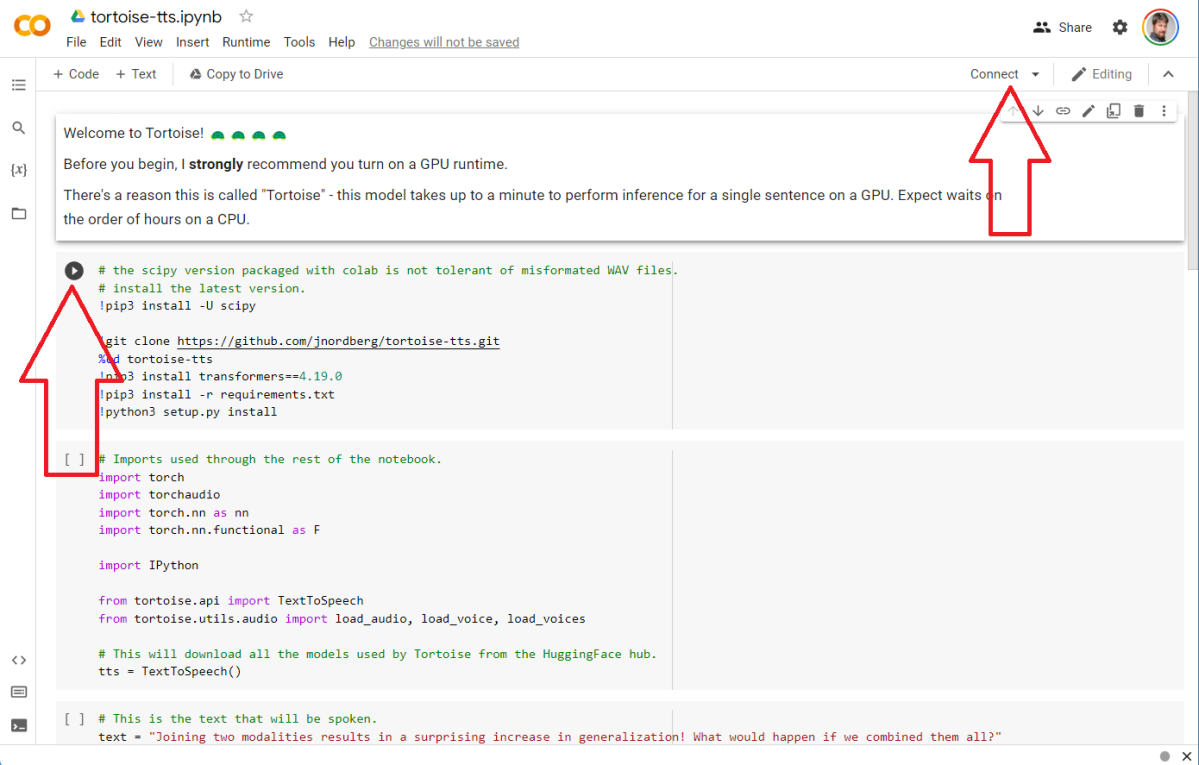

How does all of it work? Through a public utility that you just won’t pay attention to: Google Colab. Essentially, Collab is a cloud service that Google supplies that permits entry to a Python server. The code that you just (or another person) writes might be saved as a pocket book, which might be shared with customers who’ve a generic Google account. The Tortoise shared resource is here.

The interface appears intimidating, however it’s not that dangerous. You’ll should be logged in as a Google person and you then’ll must click on “Connect” within the upper-right-hand nook. A phrase of warning. While this Colab doesn’t obtain something to your Google Drive, different Colabs would possibly. (The audio recordsdata this generates, although, are saved within the browser however might be downloaded to your PC.) Be conscious that you just’re operating code that another person has written. You could obtain error messages both due to dangerous inputs or as a result of Google has a hiccup on the again finish corresponding to not having an accessible GPU. It’s all a bit experimental.

Each block of code has a small “play” icon that seems in case you hover your mouse over it. You’ll must click on “play” on every block of code to run it, ready for every block to execute earlier than you run the following.

While we’re not going to step by detailed directions on all the options, simply bear in mind that the pink textual content is person modifiable, such because the instructed textual content that you really want the mannequin to talk. About seven blocks down, you’ll have the choice of coaching the mannequin. You’ll want to call the mannequin, then add the audio recordsdata. When that completes, choose the brand new audio mannequin within the fourth block, run the code, then configure the textual content within the third block. Run that code block.

If every part goes as deliberate, you’ll have a small audio output of your pattern voice. Does it work? Well, I did a quick-and-dirty voice mannequin of my colleague Gordon Mah Ung, whose work seems on our The Full Nerd podcast in addition to varied movies. I uploaded a several-minute pattern moderately than the quick snippets, simply to see if it will work.

The end result? Well, it sounds lifelike, however not like Gordon in any respect. He’s actually protected from digital impersonation for now. (This shouldn’t be an endorsement of any fast-food chain, both.)

But an current mannequin that the Tortoise writer skilled on actor Tom Hanks sounds fairly good. This shouldn’t be Tom Hanks talking right here! Tom additionally did not provide me a job, however it was sufficient to idiot no less than considered one of my buddies.

The conclusion? It’s a little bit scary: the age of believing what we hear (and shortly see) is ending. Or it already has.

[adinserter block=”4″]

[ad_2]

Source link