AMD announced the Ryzen 8040 series of laptop processors at the company’s AI-themed event, reframing what has been a conversation about CPU speed, power, and battery life into one that prioritizes AI.

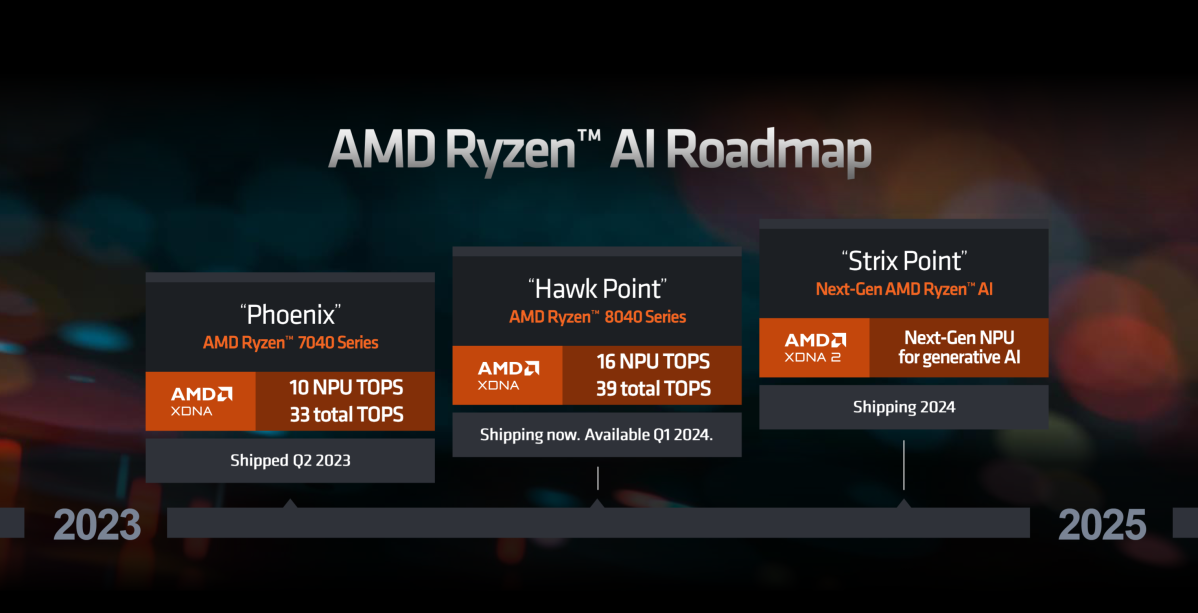

In January, AMD launched the Ryzen 7000 family, of which the Ryzen 7040 included the first use of what AMD then called its XDNA architecture, powering Ryzen AI. (When rival Intel disclosed its Meteor Lake processor this past summer, Intel began referring to the AI accelerator as an NPU, and the name stuck.)

More than 50 laptop models already ship with Ryzen AI, executives said. Now, it’s on to AMD’s next NPU, Hawk Point, inside the Ryzen 8040. In AMD’s case, the XDNA NPU assists the Zen CPU, with the Radeon RDNA architecture of the GPU powering graphics. But all three logic components work harmoniously, contributing to the greater whole.

“We view AI as the single most transformational technology of the last ten years,” said Dr. Lisa Su, AMD’s chief executive, in kicking off AMD’s “Advancing AI” presentation on Wednesday.

Now, the battle is being waged across multiple fronts. While Microsoft and Google may want AI to be computed in the cloud, all the heavyweight chip companies are making a case for it to be processed locally, on the PC. That means finding applications that can take advantage of the local AI processing capabilities. And that means partnering with software developers to code apps for specific processors. The upshot is that AMD and its rivals must provide software tools that let those applications talk to their chips.

Naturally, AMD, Intel, and Qualcomm want these apps to run most effectively on their own silicon, so the chip companies have to compete on two separate tracks: Not only do they have to produce the most powerful AI silicon, they must also ensure app developers can code to their chips in the most efficient way possible.

Silicon makers have tried to entice game developers to do the same for years. And though AI can seem impenetrable from the outside, you can tease out familiar concepts: quantization, for example, can be seen as a form of data compression that reduces the complexity of large language models, which normally run on powerful server processors, so they can run on local processors like the Ryzen 8000 series. Those kinds of tools are critical for “local AI” to succeed.

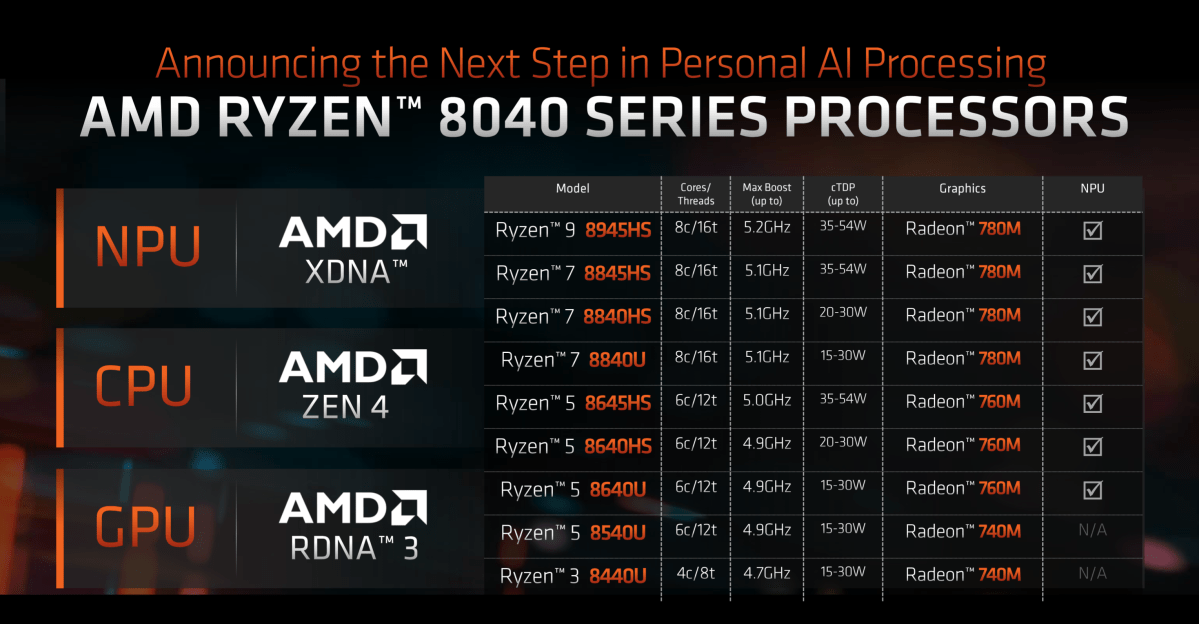

Meet the nine Ryzen 8040 mobile processors

As with many chips, AMD is announcing the Ryzen 8040 series now, but you’ll see them in laptops beginning next year.

The Ryzen 8040 series combines AMD’s Zen 4 architecture, its RDNA 3 GPUs, and (still) the first-gen XDNA architecture. But the new chips actually use AMD’s second NPU. The first, “Phoenix,” delivered 10 trillions of operations per second (TOPS) from the NPU and 33 total TOPS, with the remainder coming from the CPU and the GPU. The 8040 series includes “Hawk Point,” which increases the NPU to 16 TOPS, with 39 TOPS in total.
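To put those TOPS figures in perspective, here is a small sketch that works out the generational gain. The numbers come straight from the paragraph above; the helper names (`pct_gain`, `non_npu`) are made up for illustration and are not anything AMD provides.

```python
# TOPS figures as quoted in the article (trillions of operations per second).
phoenix = {"npu": 10, "total": 33}
hawk_point = {"npu": 16, "total": 39}


def pct_gain(old, new):
    """Percentage increase going from old to new."""
    return (new - old) / old * 100


def non_npu(chip):
    """TOPS attributed to the CPU and GPU, i.e. everything that is not the NPU."""
    return chip["total"] - chip["npu"]


npu_gain = pct_gain(phoenix["npu"], hawk_point["npu"])
total_gain = pct_gain(phoenix["total"], hawk_point["total"])

print(f"NPU gain: {npu_gain:.0f}%")
print(f"Total gain: {total_gain:.1f}%")
print(f"CPU+GPU TOPS: {non_npu(phoenix)} (Phoenix) vs. {non_npu(hawk_point)} (Hawk Point)")
```

By these figures the CPU and GPU contribution is the same in both generations, so the whole six-TOPS improvement comes from the NPU.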

Donny Woligroski, senior mobile processor technical marketing manager, told reporters that the CPU uses AVX-512 VNNI instructions to run lightweight AI functions. AI can also run on the GPU, but at a high, inefficient power level, a stance we’ve heard from Intel, too.

“When it comes to efficiency, raw performance isn’t enough,” Woligroski said. “You’ve got to be able to run this stuff in a laptop.”

There are nine members of the Ryzen 8040 series. The seven most powerful include the Hawk Point NPU. They range from 8 cores/16 threads and a boost clock of 5.2GHz at the high end, down to 4 cores/8 threads and 4.7GHz. TDPs range from a minimum of 15W up to 35W at the high end, stretching to 54W.

The nine new chips include:

- Ryzen 9 8945HS: 8 cores/16 threads, 5.2GHz (boost); Radeon 780M graphics, 35-54W

- Ryzen 7 8845HS: 8 cores/16 threads, 5.1GHz (boost); Radeon 780M graphics, 35-54W

- Ryzen 7 8840HS: 8 cores/16 threads, 5.1GHz (boost); Radeon 780M graphics, 20-30W

- Ryzen 7 8840U: 8 cores/16 threads, 5.1GHz (boost); Radeon 780M graphics, 15-30W

- Ryzen 5 8645HS: 6 cores/12 threads, 5.0GHz (boost); Radeon 760M graphics, 35-54W

- Ryzen 5 8640HS: 6 cores/12 threads, 4.9GHz (boost); Radeon 760M graphics, 20-30W

- Ryzen 5 8640U: 6 cores/12 threads, 5.1GHz (boost); Radeon 760M graphics, 20-30W

- Ryzen 5 8540U: 6 cores/12 threads, 4.9GHz (boost); Radeon 740M graphics, 15-30W

- Ryzen 3 8440U: 4 cores/8 threads, 4.7GHz (boost); Radeon 740M graphics, 15-30W

According to AMD’s model number “decoder ring” (which Intel recently slammed as “snake oil”), all the new processors use the Zen 4 architecture and will ship in laptops in 2024. AMD offers three integrated GPUs: the 780M (12 cores, up to 2.7GHz), the 760M (8 cores, up to 2.6GHz), and the 740M (4 cores, up to 2.5GHz). All are based on the RDNA3 graphics architecture, with DDR5/LPDDR5 support. Those iGPUs appeared earlier in the Ryzen 7040 mobile chips that debuted earlier this year with the Phoenix NPU.
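For readers who want to see how the “decoder ring” reads a model number, here is a rough sketch. It assumes AMD’s published mobile naming scheme, in which the first digit marks the model year and the third digit marks the CPU architecture; the lookup tables are deliberately partial, and the function name `decode_model` is hypothetical.

```python
# Partial lookup tables, assumed from AMD's published mobile naming scheme.
MODEL_YEAR = {"7": 2023, "8": 2024}          # first digit: portfolio model year
ARCHITECTURE = {"2": "Zen 2", "4": "Zen 4"}  # third digit: CPU architecture


def decode_model(model):
    """Split a model number such as '8945HS' into its coded parts."""
    digits, suffix = model[:4], model[4:]
    if len(digits) != 4 or not digits.isdigit():
        raise ValueError(f"expected four leading digits, got {model!r}")
    return {
        "year": MODEL_YEAR.get(digits[0], "unknown"),
        "architecture": ARCHITECTURE.get(digits[2], "unknown"),
        "suffix": suffix,  # form factor / power class, e.g. HS or U
    }


# Model numbers taken from the list in the article.
for name in ["8945HS", "8840U", "8440U"]:
    print(name, decode_model(name))
```

Run against the chips listed above, every part decodes to a 2024 model year and Zen 4, which matches the article’s reading of the scheme.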

Interestingly, AMD isn’t announcing any “HX” parts for premium gaming, at least not yet.

AMD is also disclosing a third-gen NPU, “Strix Point,” which it will ship sometime later in 2024, presumably inside a next-gen Ryzen processor. AMD didn’t disclose any specific Strix Point specs, but said that it will deliver more than three times the generative AI performance of the prior generation.

Ryzen 8040 performance

AMD included some generic benchmark comparisons pitting the 8940H against the Intel Core i9-13900H at 1080p on low settings, claiming its own chip beats Intel’s by 1.8X. (AMD used nine games for the comparison, though it didn’t explain how it arrived at the numbers.) AMD also claims a 1.4X performance boost on the same chips, somehow amalgamating Cinebench R23 and Geekbench 6.

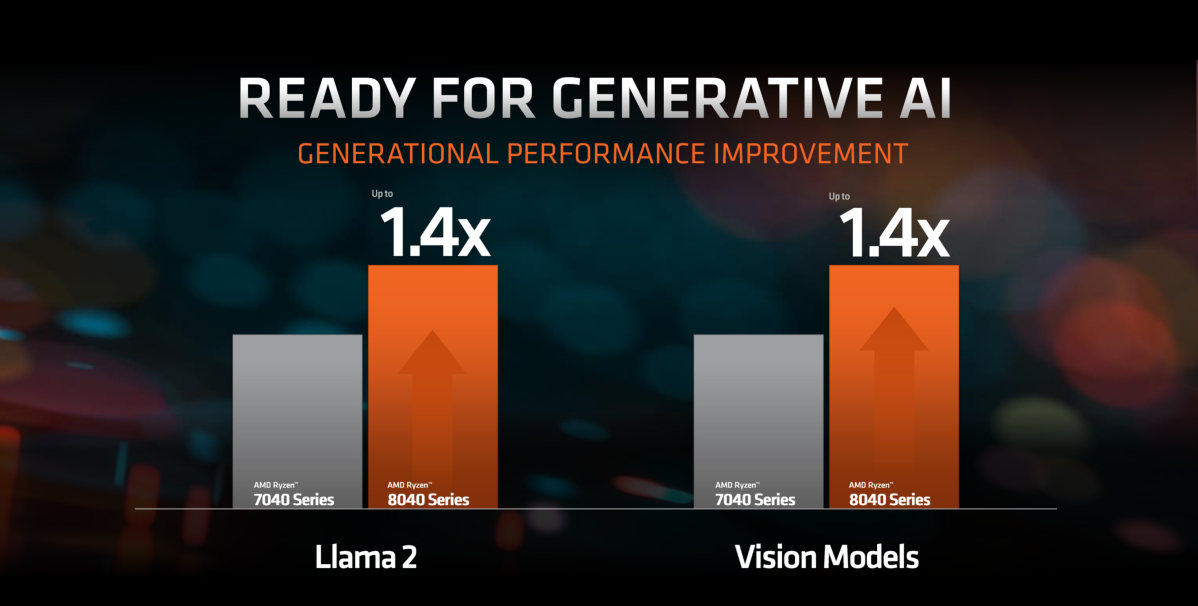

AMD also claimed a gen-over-gen AI improvement of 1.4X on Facebook’s Llama 2 large language model and “vision models,” comparing the 7940HS and 8840HS.

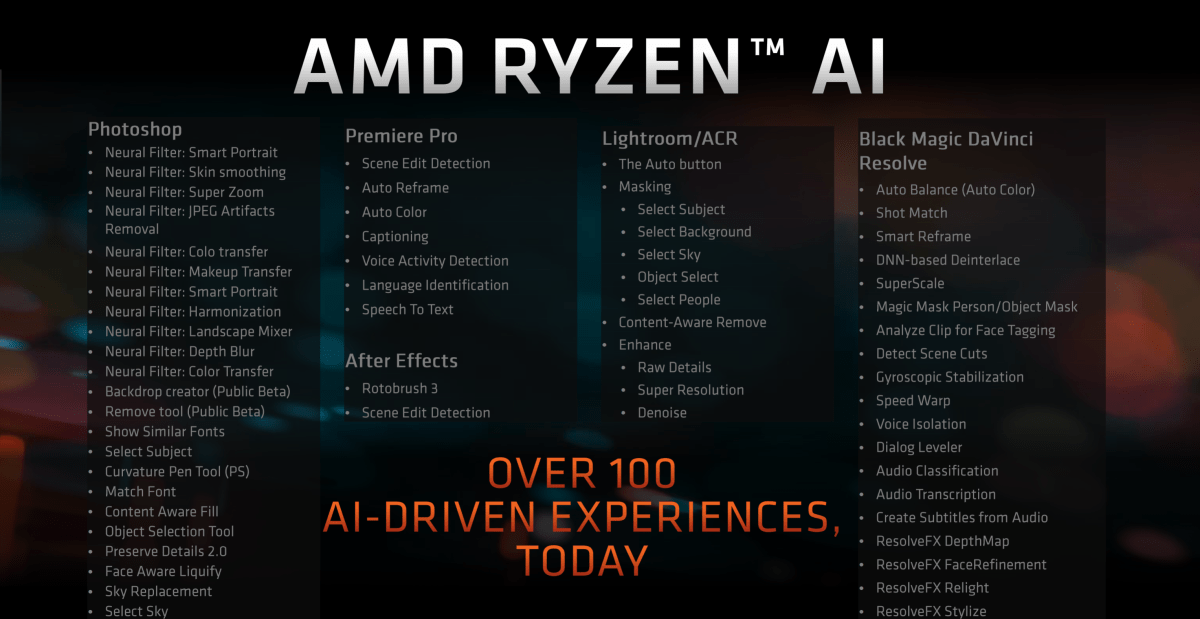

With AI in its infancy, silicon makers don’t have many points of comparison for traditional benchmarks. AMD executives highlighted local AI-powered experiences, such as the various neural filters found within Photoshop, the masking tools within Lightroom, and a number of tools within Blackmagic’s DaVinci Resolve. The underlying message is that this is why you need local AI, rather than running it in the cloud.

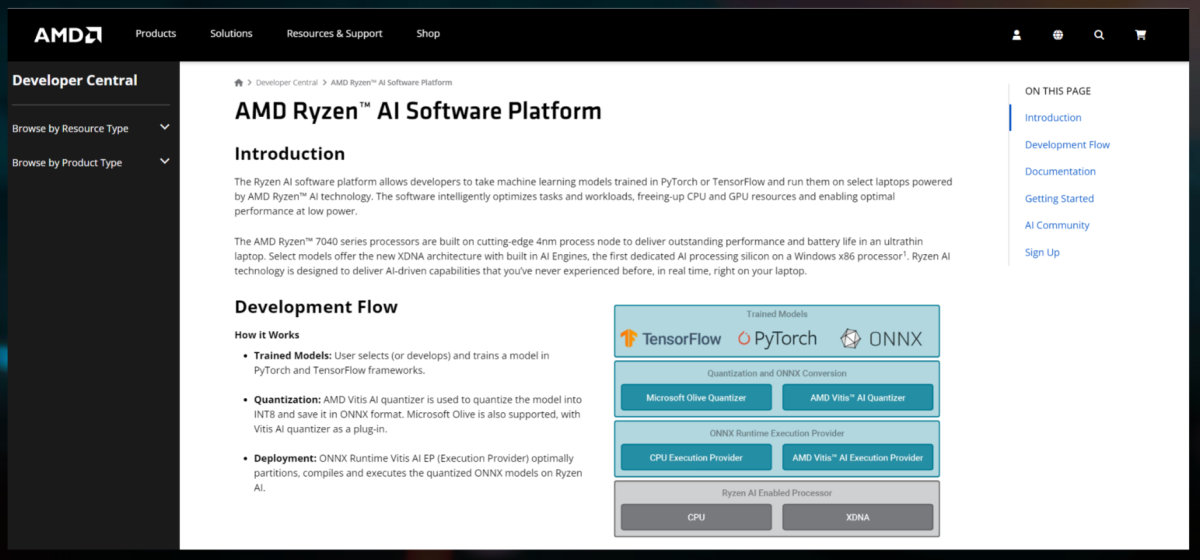

AMD is also debuting AMD Ryzen AI Software, a tool that allows a model developed in PyTorch or TensorFlow for workstations and servers to run on a local AI-enabled Ryzen chip.

The problem with running a really large large-language model like a local chatbot, voice-changing software, or some other model is that servers and workstations have more powerful processors and more available memory. Laptops don’t. Ryzen AI Software is designed to take the LLM and essentially transcode it into a simpler, less intensive version that can run on the more limited memory and processing power of a Ryzen laptop.

Put another way, the bulk of what you think of as a chatbot, or LLM, is actually the “weights,” or parameters: the relationships between various concepts and words. LLMs like GPT-3 have billions of parameters, and storing and executing them (inferencing) takes enormous computing resources. Quantization is a bit like image compression, reducing the size of the weights, hopefully without ruining the “intelligence” of the model.
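To make the compression analogy concrete, here is a minimal sketch of one common approach, symmetric 8-bit quantization, in plain Python. It is an illustration of the general idea only, not AMD’s tooling: the sample weights and the function names `quantize_int8` and `dequantize` are invented for this example.

```python
def quantize_int8(weights):
    """Map floating-point weights onto integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floating-point weights from the integers."""
    return [q * scale for q in quantized]


# Made-up sample weights; a real model has billions of these.
weights = [0.12, -0.53, 0.98, -1.27, 0.004]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

fp32_bytes = len(weights) * 4  # 32-bit floats take 4 bytes each
int8_bytes = len(weights) * 1  # 8-bit integers take 1 byte each

print("quantized:", q)
print("largest rounding error:", max_error)
print(f"storage: {fp32_bytes} bytes -> {int8_bytes} bytes")
```

The weights shrink to a quarter of their 32-bit size, and each one moves by at most half a quantization step. That rounding error is the “hopefully” in the paragraph above: small errors per weight can still add up across billions of parameters.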

AMD and Microsoft use ONNX for this, an open model format with an open-source runtime (ONNX Runtime) that offers built-in optimizations and simple startup scripts. Ryzen AI Software would allow this quantization to happen automatically, saving the model in an ONNX format that is ready to run on a Ryzen chip.

Executives said that the Ryzen AI Software tool will be released today, giving both independent developers and enthusiasts an easier way to try out AI themselves.

AMD is also launching the AMD Pervasive AI Contest, with prizes for development in robotics AI, generative AI, and PC AI. Prizes for PC AI, which involves creating unique apps for speech or vision, begin at $3,000 and climb to $10,000.

All this helps propel AMD in what is still the early part of the race to establish AI, especially in the client PC. Next year promises to solidify where each chip company fits as they round the first turn.
