Last week, Google launched its new AI, or rather its new large language model, dubbed Gemini. The Gemini 1.0 model is available in three versions: Gemini Nano is intended to be best suited for on-device tasks, Gemini Pro is intended to be the best option for a wider range of tasks, and Gemini Ultra is Google's largest language model, which can handle the most complex tasks you can give it.

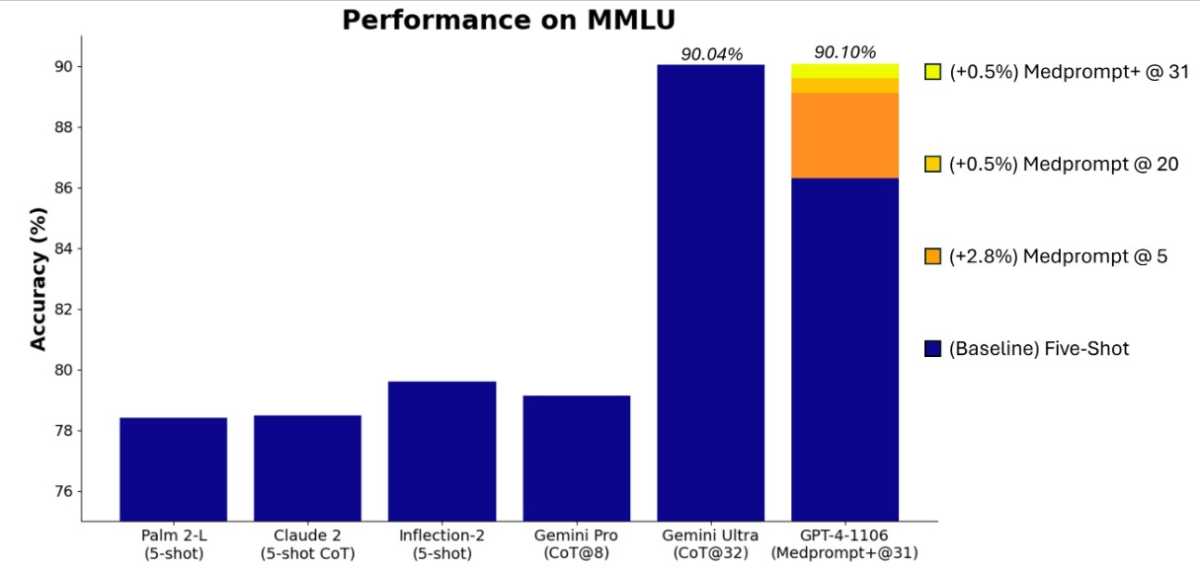

Something Google was keen to highlight at the launch of Gemini Ultra was that the language model outperformed the latest version of OpenAI's GPT-4 in 30 of the 32 most commonly used tests for measuring the capabilities of language models. The tests cover everything from reading comprehension and various math questions to writing Python code and image analysis. In some of the tests, the difference between the two AI models was just a few tenths of a percentage point, while in others it was as much as ten percentage points.

Perhaps Gemini Ultra's most impressive achievement, however, is that it is the first language model to beat human experts on the massive multitask language understanding (MMLU) test, in which Gemini Ultra and experts were given problem-solving tasks in 57 different fields, ranging from math and physics to medicine, law, and ethics. Gemini Ultra achieved a score of 90.0%, while the human expert it was compared with "only" scored 89.8%.

The rollout of Gemini will be gradual. Last week, Gemini Pro became available to the public, as Google's chatbot Bard started using a modified version of the language model, and Gemini Nano is built into a number of different features on Google's Pixel 8 Pro smartphone. Gemini Ultra is not ready for the public yet. Google says it is still undergoing safety testing and is only being shared with a handful of developers and partners, as well as experts in AI responsibility and safety. However, the plan is to make Gemini Ultra available to the public via Bard Advanced when it launches early next year.

Microsoft has now countered Google's claims that Gemini Ultra can beat GPT-4 by having GPT-4 run the same tests again, but this time with slightly modified prompts, or inputs. In November, Microsoft researchers published research on something they called Medprompt, a combination of different strategies for feeding prompts into the language model to get better results. You may have noticed that the answers you get from ChatGPT, or the images you get from Bing's Image Creator, differ slightly when you change the wording a little. That concept, in a much more advanced form, is the idea behind Medprompt.

By using Medprompt, Microsoft managed to make GPT-4 perform better than Gemini Ultra on many of the 30 tests Google had highlighted, including MMLU, where GPT-4 with Medprompt inputs achieved a score of 90.10%. Which language model will dominate in the future remains to be seen. The battle for the AI throne is far from over.

This article was translated from Swedish to English and originally appeared on pcforalla.se.
