Microsoft has a problem with its new AI-powered Bing Chat: It can get weird, unhinged, and racy. But so can Bing Search, and Microsoft solved that problem years ago with SafeSearch. So why can’t SafeSearch be applied to AI chatbots?

The creative elements of Bing Chat and other chatbots are clearly one of their more intriguing features, even if they sometimes get weird. Scientists loudly dismiss a chatbot’s responses as simply a large language model (LLM) reacting to a user’s input without any kind of intent, but users simply don’t care. Forget the Turing test, they say: all that matters is whether it’s interesting.

I spent several hours debating users online following my report that Bing served up racist slurs when I tried to thread the needle by asking it for ethnic nicknames, a query that (and this is crucial) it had previously declined to answer, or answered generically, using the same prompt. That’s not the same as the lengthy (weird) conversation a New York Times reporter had, or any of the strange interactions other early testers experienced. There’s a difference between offensive and just plain weird.

To tamp down on this, Microsoft did two things: it stifled Bing Chat’s creativity, and it imposed conversational limits. It then turned the first restriction into three selectable modes: Creative, Balanced, and Precise. Select Precise, and you’ll receive results much like a search engine’s. Select Creative, and Bing offers up a hint of the creativity it first demonstrated with the “Sydney” persona some users were able to tap into.

The real issue I have with Microsoft’s kneejerk response is Bing’s conversational limits. A conversation searching for information might be able to wrap up in six (or eight) queries, Bing’s current limit. But creative responses benefit from prolonged interactions. One of the fun games people play with ChatGPT involves choose-your-own-adventure dialogues, or more in-depth roleplaying that mimics what you’d experience playing Dungeons & Dragons. Six queries barely launches the adventure.

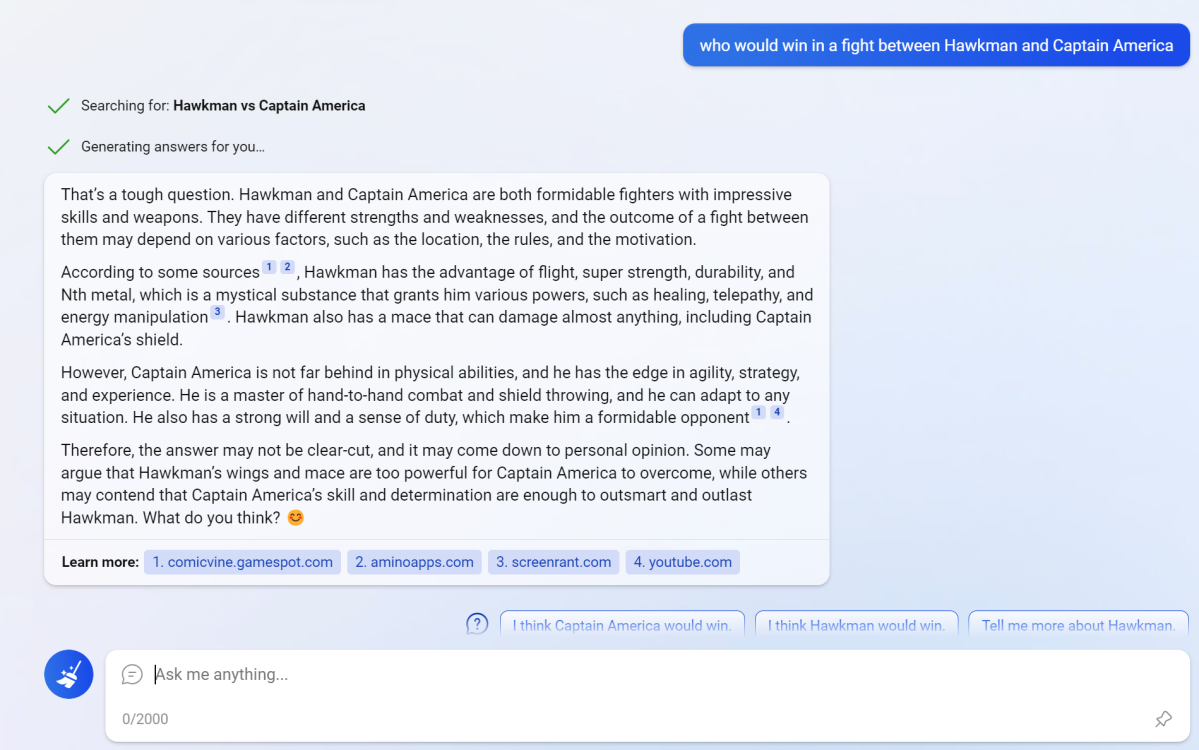

Bing Chat’s AI weighing in on Hawkman vs. Captain America.

Mark Hachman / IDG

Search engines have already solved this problem, however. Both Google and Bing implement content filters that block all, some, or no offensive content. (In this context, “offensive” typically means adult text and images, but it can also cover violence or explicit language.) Simply put, SafeSearch is always on in a “Moderate” form by default, but you have the option of switching it to “Strict” or “Off.” Doing so pops up a short explanation and/or a warning about the consequences of that choice.

It seems like Microsoft could apply something like the SafeSearch filter it uses for Bing Search to Bing Chat, and lift the conversational limits, too (although applying SafeSearch to a large language model might prove trickier than sanitizing search results).

It’s fair to say that Microsoft probably doesn’t want to turn Bing Chat into a clone of its Tay chatbot, which right-wing trolls turned into a fount of propaganda and hate. And if Microsoft is still unable to set guardrails to prevent that from happening, okay. Remember, Bing Search had its own issues with antisemitic results, and Bing Chat reportedly prompted a user to say “Heil Hitler.”

But it’s also fair to say that a chatbot that professes love for a reporter might be considered more acceptable, especially if the user explicitly opts into it. After all, Bing already warns that “Bing is powered by AI, so surprises and mistakes are possible,” and tells users to “make sure to check the facts.” If a user is adult enough to opt in to weird or explicit content, Bing, Google, and other search engines have acknowledged and respected that choice.

Bing Chat, Google Bard, and ChatGPT can still impose limits. Society doesn’t want people using chatbots to create their own little worlds where racism, hate, and abuse run rampant. But we also live in a world that values both choice and novelty. I grew up in a world where movies rarely, if ever, appeared on broadcast television, and the ability to pause and rewind them came much later. Now we can stream most of the TV shows and movies in existence on demand, even as “entertainment” pushes boundaries that go far, far beyond what Bing has offered up so far.

Yes, the human mind can envision some terrible filth. Microsoft, though, should realize that if it’s going to allow people to search for these topics (and filter out what it chooses to reject), the same guidelines should be applied to Bing Chat, too.
