This study aimed to build a sports betting database to identify anomalies and detect match-fixing through betting odds data. The database contains data on sports teams, match results, and betting odds. A match-fixing detection model was created based on the database.

Sport database

The database was built on world soccer league match betting data from 12 betting companies (188bet, Interwetten, Vcbet, 12bet, Willhill, Macauslot, Sbobet, Wewbet, Mansion88, Easybet, Bet365, and Crown), using the historical database documentation of the iSports API. iSports API is a sports data company that provides application programming interfaces (APIs) for accessing and integrating sports data into various platforms and applications. It offers a vast collection of data on players, teams, game schedules, and league rankings for every sports league, including soccer, basketball, baseball, hockey, and tennis. This study built its database using data on soccer matches. As shown in Table 1, 31 types of data were collected.

The API collects data from multiple sources using a combination of automated web scraping technology, data feeds, and partnerships with sports data providers. To extract data, web scraping techniques are applied to sports websites, including official league and team sites, news platforms, and sports statistics portals. Once gathered, the data are aggregated and presented in a consistent and structured format. This involves standardizing data fields, normalizing data formats, and merging information from different sources to create comprehensive and unified datasets. Furthermore, iSports API employs quality assurance measures to ensure the accuracy and reliability of the collected data. The data collected by iSports API comprise match betting data from various world soccer leagues, covering the period from 2000 to 2020, including data from leagues such as the K-League, Premier League, and Primera Liga. The dataset contains odds for home matches, away matches, and ties, which are recorded at minute intervals throughout each match.

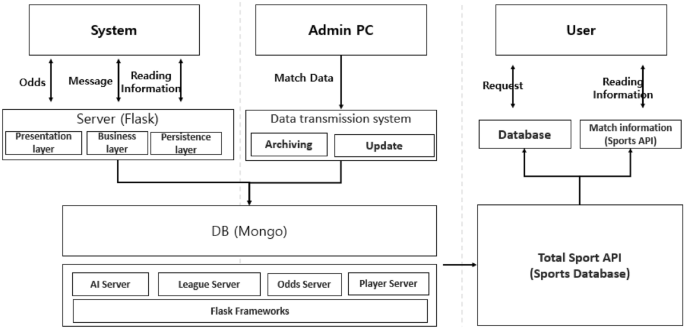

The variables in Table 1 constitute the database, as shown in Fig. 1. The Flask server is available for users to request data on betting odds, user messages, and matches. The Admin PC constantly updates match data and stores them in the database. The database was built in MongoDB and provides the following servers: the Sport Server, covering matches and weather; the League Server, covering league and cup profiles, league rankings, and events during matches; the Odds Server, covering betting odds of different categories as well as the betting company site; and the Player Server, covering player performance, profiles, and other information. The database continuously collects soccer match data based on 31 variables that affect the outcome of the game. This allows us to assess whether the derived match odds exhibit a normal or abnormal pattern, based on various factors. The database also enables the comparison of real-time data on the 31 variables and odds, thereby enabling the identification of abnormal games, both in real time and retrospectively.

Betting models

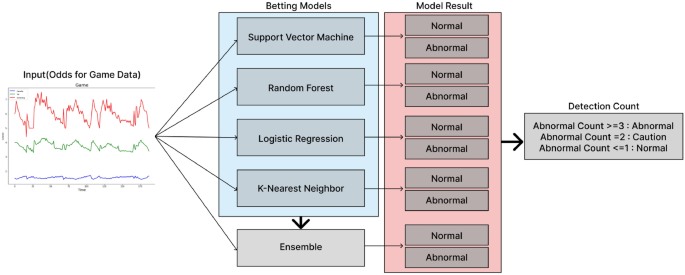

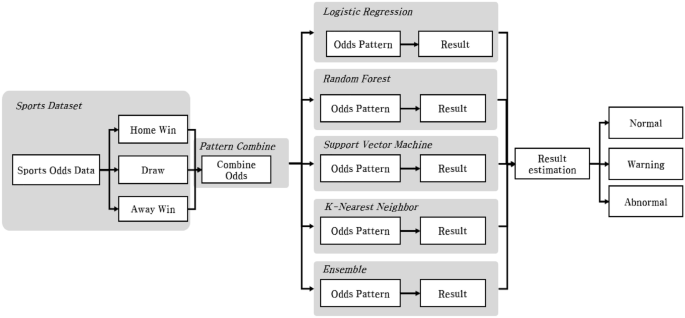

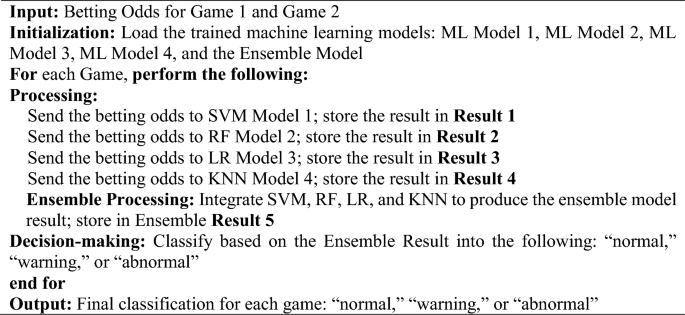

This study employed four models: support vector machine (SVM), random forest (RF), logistic regression (LR), and k-nearest neighbor (KNN), known for their robust performance in classifying normal and abnormal games based on win odds, tie odds, and loss odds patterns. Instead of relying solely on the patterns of normal and abnormal games identified by these four distinct machine-learning models, we further integrated them into an ensemble model by aggregating their parameters. By pooling the predictions of all five models (the original four plus the ensemble) through a voting mechanism, we classified games into three distinct patterns: “normal,” “caution,” and “abnormal,” based on the collective consensus of these models.

The betting model of this study can be described by Algorithm 1 as follows. A total of five models were used to detect abnormal games: four individual machine learning models and one ensemble model based on the parameters of the other four. To determine the authenticity of a game, the results of all five models were aggregated, and the game was classified as “normal,” “caution,” or “abnormal” based on the number of models that identified it as potentially fraudulent.

This comprehensive dataset allowed us to identify patterns associated with abnormal matches, thereby enabling the classification model to learn and distinguish between normal and abnormal labels. The classification model was therefore employed to analyze the dataset effectively. The data used for classification were employed to identify patterns of win odds, tie odds, and loss odds observed in soccer matches, using the proposed method. These patterns were then converted into specific values and used in the classification process. Thus, a specific pattern of odds in soccer matches served as a model variable.

Ensemble process for detecting abnormal games

We developed a sophisticated multimodal artificial intelligence model designed to monitor and analyze different types of data for anomaly detection. The model follows the process shown in Fig. 2, which integrates input from multiple sources and uses an ensemble approach in which each submodel is specialized for a specific data type. The system combines insights from these submodels to assess the overall situation and sorts the results into different categories. The decision-making process is based on a consensus mechanism [28]. If the majority of submodels flag an event as suspicious, the event is labeled as ‘abnormal.’ Consequently, the integrated model is capable of distinguishing between ‘normal’ and ‘abnormal’ results with high accuracy. To provide more nuanced insights, the model categorizes the anomalies into three levels.

To illustrate the overall process of the model, suppose we want to detect anomalies in the odds data of a single match. The odds data are evaluated by five models: four trained models and an ensemble model of the four. One odds data record is passed to each of the five models as input, each model judges it as normal or abnormal, and five results are obtained. If the count of Abnormal results is 3 or more, the match is Abnormal; if it is 2, the match is Caution; and if it is 1 or less, the match is Normal. Thus, each of the five classification models produces one of two prediction labels, whereas the overall model counts these labels and produces one of three outcomes.

1. Normal: If the ‘Abnormal Count,’ which represents the number of submodels indicating an anomaly, is 1 or less, the situation is judged as normal, indicating typical and safe operational conditions.

2. Caution: If the ‘Abnormal Count’ is exactly 2, it signals a need for caution. This level suggests that there may be potential issues or emerging risks that require closer monitoring or preventive measures.

3. Abnormal: If the ‘Abnormal Count’ is 3 or more, the situation is judged as abnormal. This classification indicates a high likelihood of a significant issue or anomaly that needs immediate attention and possibly corrective action.
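The three-level rule above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `classify_match` and the string labels are assumptions, and the thresholds are the ones stated in this section (3 or more abnormal votes, exactly 2, and 1 or less).

```python
def classify_match(votes):
    """Map the five per-model judgments to one of three levels.

    `votes` is a list of labels, each "normal" or "abnormal"
    (hypothetical label strings chosen for this sketch).
    """
    abnormal_count = sum(1 for v in votes if v == "abnormal")
    if abnormal_count >= 3:
        return "abnormal"
    if abnormal_count == 2:
        return "caution"
    return "normal"  # an Abnormal Count of 1 or less


# Example: two of the five models flag the match.
example_votes = ["normal", "abnormal", "normal", "abnormal", "normal"]
print(classify_match(example_votes))  # caution
```

Counting votes rather than trusting a single model means that one model's false alarm alone never moves a match out of the normal level.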

Support vector machine

An SVM is a data classification model that uses a decision boundary to separate the data space into two disjoint half-spaces. New input data are classified according to the half-space in which they fall. The larger the gap between the boundary and the data, the more accurate the classification model. It is therefore common to leave a gap, known as the margin, on both sides of the decision boundary. In this study, a maximum margin was created to enhance classification accuracy, and the data falling inside the margin were eliminated [29].

The SVM algorithm on the p-dimensional hyperplane is shown in Eq. (5), with \(f\left( X \right) = 0\).

$$f\left( X \right) = \beta_{0} + \beta_{1} X_{1} + \cdots + \beta_{p} X_{p}$$

(5)

$$f\left( X \right) = 0$$

(6)

The class assigned by the hyperplane is 1 (Class 1) if \(f\left( X_{i} \right) > 0\) and −1 (Class 2) if \(f\left( X_{i} \right) < 0\). Data were considered correctly classified when the left-hand side of Eq. (7) was positive, with \(Y_{i} \in \{-1, 1\}\).

$$Y_{i} \left( \beta_{0} + \beta_{1} X_{i1} + \cdots + \beta_{p} X_{ip} \right) > 0$$

(7)
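Eqs. (5) to (7) translate directly into code. The sketch below is illustrative only: the function names and the example coefficients are assumptions chosen for readability, not values from the study.

```python
def decision_value(beta0, beta, x):
    """f(X) = beta0 + beta1*X1 + ... + betap*Xp, as in Eq. (5)."""
    return beta0 + sum(b * xj for b, xj in zip(beta, x))


def predict_class(beta0, beta, x):
    """Return 1 (Class 1) if f(X) > 0, otherwise -1 (Class 2).

    A point lying exactly on the hyperplane (f(X) = 0) is assigned
    to -1 here; that tie-breaking choice is an assumption.
    """
    return 1 if decision_value(beta0, beta, x) > 0 else -1


def is_correctly_classified(beta0, beta, x, y):
    """Check the condition of Eq. (7): y * f(x) > 0 with y in {-1, 1}."""
    return y * decision_value(beta0, beta, x) > 0


# Hypothetical two-feature hyperplane.
beta0, beta = -1.0, [0.6, 0.8]
print(predict_class(beta0, beta, [2.0, 1.0]))   # 1
print(predict_class(beta0, beta, [0.5, 0.5]))   # -1
```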

With a hyperplane, as shown in Eq. (7), the data can be divided at different angles. However, for a classification model to be highly accurate, the hyperplane must be optimized by maximizing the margin between the different data points. This leads to finding the maximum margin M, as shown in Eqs. (8) to (11). Consequently, the hyperplane and margin are determined while allowing errors \(\epsilon_{i}\) to some degree, before eliminating all data inside the margin as outliers.

$$\mathop{\text{maximize}}\limits_{\beta_{0},\beta_{1},\ldots,\beta_{p},\;\epsilon_{1},\ldots,\epsilon_{n},\;M} \; M$$

(8)

$$\text{subject to } \sum\limits_{j = 1}^{p} \beta_{j}^{2} = 1$$

(9)

$$Y_{i} \left( \beta_{0} + \beta_{1} X_{i1} + \cdots + \beta_{p} X_{ip} \right) \ge M\left( 1 - \epsilon_{i} \right)$$

(10)

$$\epsilon_{i} \ge 0, \quad \sum\limits_{i = 1}^{n} \epsilon_{i} \le C$$

(11)

For the SVM model, the C (regularization strength) value was set to 0.1 to prevent overfitting. Because the data values are linear, a linear kernel was used; because the odds of abnormal matches do not have regular features, the RBF (radial basis function) kernel was adopted to derive such non-linear features [30].

Random forest

In the RF model, decision trees (hierarchical structures composed of nodes and the edges that connect them) help determine the optimal result. A decision tree recursively splits the learning data into subsets. This recursive division repeats on the divided subsets until no predictive value remains or all values in a subset node are identical to the target variable. This procedure is known as top-down induction of decision trees (TDIDT), in which the dependent variable Y serves as the target variable in the classification; the feature vector v is expressed by Eq. (12).

$$\left( v, Y \right) = \left( x_{1}, x_{2}, \ldots, x_{d}, Y \right)$$

(12)

When classifying data using TDIDT, Gini impurity may be used to measure how often data in a set would be misclassified. When the class of an element is estimated at random, a set whose likelihood of misjudgment is near 0 is said to be pure. Gini impurity is therefore used to improve the accuracy of the RF model [31]. In Eq. (13), \(f_{i}\) is the fraction of items in the set that belong to class i, and m is the number of classes.

$$I_{G} \left( f \right) = \sum\limits_{i = 1}^{m} f_{i} \left( 1 - f_{i} \right) = \sum\limits_{i = 1}^{m} \left( f_{i} - f_{i}^{2} \right) = \sum\limits_{i = 1}^{m} f_{i} - \sum\limits_{i = 1}^{m} f_{i}^{2} = 1 - \sum\limits_{i = 1}^{m} f_{i}^{2}$$

(13)
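As a quick numerical check of Eq. (13), the following sketch computes the Gini impurity of a list of class labels using the final form, 1 minus the sum of squared class fractions. The function name and the label strings are illustrative assumptions.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity 1 - sum_i f_i^2, where f_i is the fraction of class i."""
    n = len(labels)
    if n == 0:
        return 0.0  # convention chosen for this sketch: an empty set is pure
    counts = Counter(labels)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


print(gini_impurity(["normal"] * 4))                     # 0.0 (pure set)
print(gini_impurity(["normal"] * 2 + ["abnormal"] * 2))  # 0.5 (evenly mixed)
```

A split that moves the subsets toward an impurity of 0 separates the classes more cleanly, which is why the tree-building procedure prefers such splits.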

Trees are trained to optimize the split function parameters of internal nodes, as well as end-node parameters, so as to minimize defined objective functions when v (data), S0 (training set), and real data labels are provided. The RF model optimizes and averages the decision tree results using the bagging method before classification. Bagging, or bootstrap aggregation, draws multiple bootstrap samples simultaneously and aggregates the results of the models trained on them; it is a method that averages diverse models to identify the optimized version.

Since the number of trees determines the performance and accuracy of the RF model, we ran a grid search with increasing numbers of trees and found that the best performance was achieved with 50 trees. We also set the ratio that determines the maximum number of features in a tree to 0.4, and the maximum depth of the tree to 10, to prevent overfitting [32].
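The bagging idea described above can be illustrated with a toy example. This is a sketch under strong simplifying assumptions, not the study's RF model: the base learner `fit_stump` is a hypothetical one-feature threshold rule rather than a full decision tree, the data are invented, and only the figure of 50 base models is taken from the text.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Draw len(rows) items from rows with replacement."""
    return [rng.choice(rows) for _ in rows]


def fit_stump(rows):
    """Hypothetical base learner for two classes: threshold one feature
    at the midpoint between the two class means of the sample."""
    by_label = {}
    for x, label in rows:
        by_label.setdefault(label, []).append(x)
    if len(by_label) < 2:
        # The bootstrap sample contained a single class: always predict it.
        only = next(iter(by_label))
        return lambda x: only
    means = {label: sum(v) / len(v) for label, v in by_label.items()}
    (lo_label, lo_mean), (hi_label, hi_mean) = sorted(
        means.items(), key=lambda kv: kv[1]
    )
    threshold = (lo_mean + hi_mean) / 2
    return lambda x: hi_label if x > threshold else lo_label


def fit_bagged(rows, n_models=50, seed=0):
    """Train n_models base learners, each on its own bootstrap sample."""
    rng = random.Random(seed)
    return [fit_stump(bootstrap_sample(rows, rng)) for _ in range(n_models)]


def bagged_predict(models, x):
    """Aggregate the base learners by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


# Invented one-feature data for illustration only.
rows = [(0.1, "normal"), (0.2, "normal"), (0.3, "normal"),
        (0.9, "abnormal"), (1.0, "abnormal"), (1.1, "abnormal")]
models = fit_bagged(rows, n_models=50)
print(bagged_predict(models, 0.0), bagged_predict(models, 1.2))
```

Because each base learner sees a different resample of the data, their individual errors tend to differ, and the vote smooths them out.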

Logistic regression

LR is a supervised learning model that predicts the probability, between 0 and 1, that given data belong to a certain class. The target variable is binary, corresponding to predicted probabilities of 0–0.5 and 0.5–1. LR is linear: each feature value is multiplied by a coefficient and the intercept is added, which gives the log-odds of the predicted value and enables data classification. Therefore, the probability (P) of the event occurring or not occurring was calculated, and the log of the odds was calculated for classification through the final value [33].

$$\text{Odds} = \frac{P\left( \text{event occurring} \right)}{P\left( \text{event not occurring} \right)}$$

(14)

To evaluate how well the results fit the model, we must calculate and average the loss over the samples. This is called log loss, expressed in Eq. (15), which contains the following elements: m = total number of data points, \(y^{(i)}\) = class of data point i, \(z^{(i)}\) = log-odds of data point i, and \(h(z^{(i)})\) = sigmoid of the log-odds. The coefficients that minimize the log loss give the optimized model.

$$- \frac{1}{m}\sum\limits_{i = 1}^{m} \left[ y^{\left( i \right)} \log \left( h\left( z^{\left( i \right)} \right) \right) + \left( 1 - y^{\left( i \right)} \right)\log \left( 1 - h\left( z^{\left( i \right)} \right) \right) \right]$$

(15)

Once the log-odds, or feature coefficient values, have been calculated, they can be passed to the sigmoid function to calculate the outcome for the data, a value between 0 and 1 indicating membership in a given class. In this study, a loss function was used to identify values near 0 or 1, in order to sort normal and abnormal matches.
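The relationship between the odds of Eq. (14), the sigmoid, and the log loss of Eq. (15) can be checked numerically. The sketch below is illustrative: the function names and the clipping constant `eps` are assumptions introduced to keep the logarithm finite, and the inputs are invented.

```python
import math


def sigmoid(z):
    """Map log-odds z to a probability in (0, 1), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def odds(p):
    """Odds of Eq. (14): P(event occurring) / P(event not occurring)."""
    return p / (1.0 - p)


def log_loss(y_true, z_values, eps=1e-12):
    """Average log loss of Eq. (15) for labels in {0, 1} and log-odds z."""
    total = 0.0
    for y, z in zip(y_true, z_values):
        h = min(max(sigmoid(z), eps), 1.0 - eps)  # clip away exact 0 and 1
        total += y * math.log(h) + (1 - y) * math.log(1.0 - h)
    return -total / len(y_true)


print(sigmoid(0.0))  # 0.5
# Confident, correct predictions give a lower loss than uninformative ones.
print(log_loss([1, 0], [4.0, -4.0]) < log_loss([1, 0], [0.0, 0.0]))  # True
```

Taking the logarithm of `odds(sigmoid(z))` recovers `z`, which is the sense in which the linear part of the model outputs log-odds.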

In this study, to find the optimal hyperparameters for each of the four models, we used grid search to fine-tune the weights of each model and select the model with the optimal accuracy. For the LR model, the C (regularization strength) value was set to 0.1 to prevent overfitting. To normalize the data values, Lasso regression analysis was adopted, which can efficiently select the flow of a specific match, and the liblinear solver, which is suitable for small datasets, was used for optimization [34].

K-nearest neighbor

KNN is an algorithm that classifies a data point based on the labels of its k nearest neighbors, using the Euclidean distance formula to evaluate distance. The Euclidean distance d between A (x1, y1) and B (x2, y2) in a two-dimensional plane is shown in Eq. (16).

$$d\left( A,B \right) = \sqrt{ \left( x_{2} - x_{1} \right)^{2} + \left( y_{2} - y_{1} \right)^{2} }$$

(16)

To distinguish between normal and abnormal matches, the present study set k to 2 and split the array values into normal or abnormal matches using the betting odds pattern appropriate for each match [35]. We set k = 2 because the task involves two classes: normal and abnormal. Consequently, ties can arise when an equal number of nearest neighbors belong to different classes. To address this issue, the analysis reinforced model stability through k-fold cross-validation. This approach enables the evaluation of both the accuracy and stability of the classification model, ensuring a more robust and reliable classification outcome for cases in which k = 2. After determining the betting odds of a new match, the match array pattern allowed us to determine whether it was closer to normal or abnormal. For the KNN model, grid search was conducted by adjusting the initial k value, and as a result, k = 2 was finally adopted. In addition, Manhattan, Minkowski, and Euclidean distances were evaluated as distance metrics, and the general Euclidean distance was adopted because of the complex nature of the data and the small number of data points [36].
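A minimal KNN classifier with k = 2 and the Euclidean distance of Eq. (16) might look as follows. This is a sketch, not the study's code: the training points are invented, and the tie-breaking rule (fall back to the single closest neighbor) is an assumption made so that the example always returns a label; the text above handles the tie issue through cross-validation instead.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors, as in Eq. (16)."""
    return math.sqrt(sum((q - p) ** 2 for p, q in zip(a, b)))


def knn_predict(train, query, k=2):
    """Classify `query` by the labels of its k nearest training points.

    `train` is a list of (vector, label) pairs. If the top two labels
    receive the same number of votes, the label of the closest neighbor
    is returned (an assumption of this sketch).
    """
    neighbors = sorted(train, key=lambda item: euclidean(item[0], query))[:k]
    ranked = Counter(label for _, label in neighbors).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return neighbors[0][1]
    return ranked[0][0]


print(euclidean((0, 0), (3, 4)))  # 5.0
train = [((0.0, 0.0), "normal"), ((0.0, 1.0), "normal"), ((5.0, 5.0), "abnormal")]
print(knn_predict(train, (0.2, 0.2)))  # normal
```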

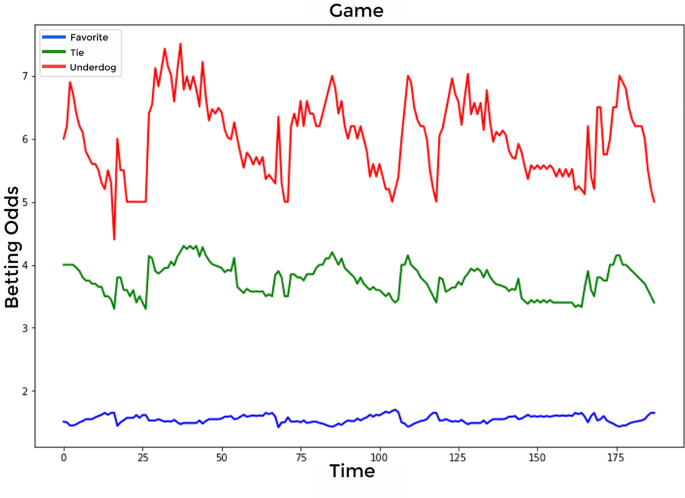

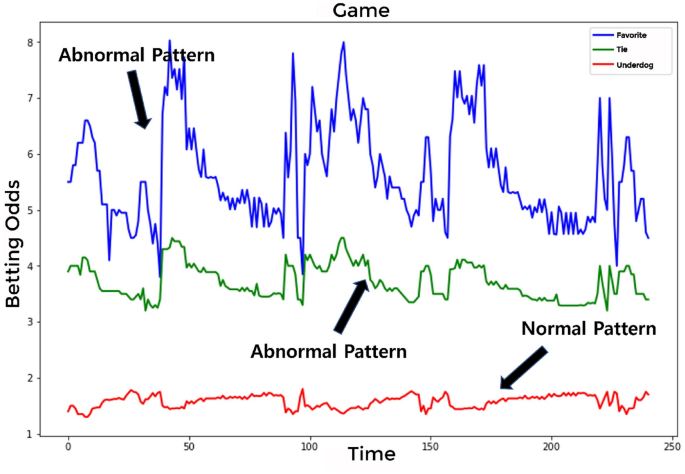

Data preprocessing

This study used hourly win-tie-loss betting odds data to classify abnormal and normal matches. K-League soccer matches and match-fixing cases between 2000 and 2020 were used as data sources. The training data, spanning 20 years, are derived from K-League soccer matches, in which each of the 12 teams plays 33 games. However, the dataset initially had a higher count of matches. Among these, a subset of matches was identified as having static data, characterized by minimal movement in betting odds due to low betting volumes. These matches were excluded from our analysis because their static nature does not provide useful insights for identifying betting trends. Consequently, the refined dataset for training consists of 2607 data points. These points represent matches that attracted a significant number of bets, making them more relevant for understanding betting patterns and trends. The learning data were based on 2586 normal and 21 abnormal matches.

The matched dividend data are shown in Fig. 3, which is an example of the time series flow of odds for one of the 2607 games. The x-axis represents “Time” in minutes, with a value assigned to each time step, and the y-axis represents the win-tie-loss betting odds values. “Favorite” denotes the betting odds of the team playing in its home stadium or one close to its home base. Conversely, “Underdog” denotes the betting odds of the team playing in an unfamiliar environment, potentially affected by factors such as different field dimensions, playing surfaces, and atmospheric conditions. “Tie” denotes the betting odds of both teams tying with an identical score in the match. Thus, the evolution of betting odds is depicted as a time series, capturing the odds both before the soccer game began and as it progressed.

For data selection, using matches not confirmed as abnormal could result in an inaccurate model. Therefore, only matches confirmed as actual instances of abnormality in the K-League were examined and used for training as abnormal cases.

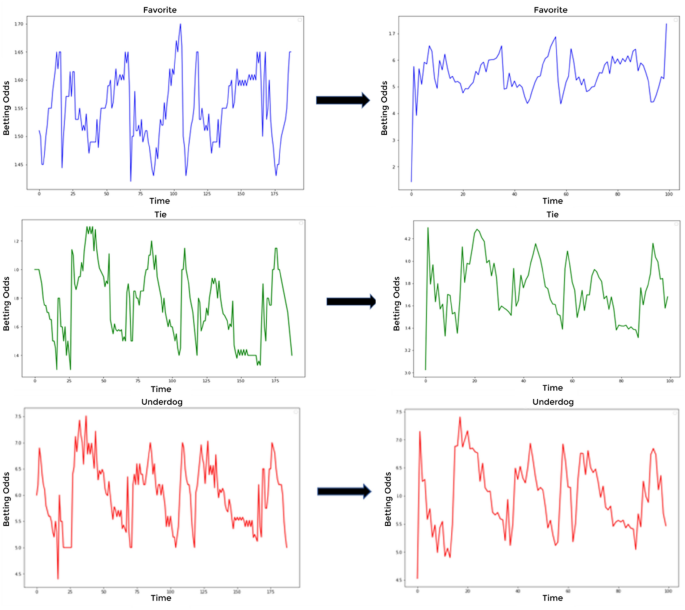

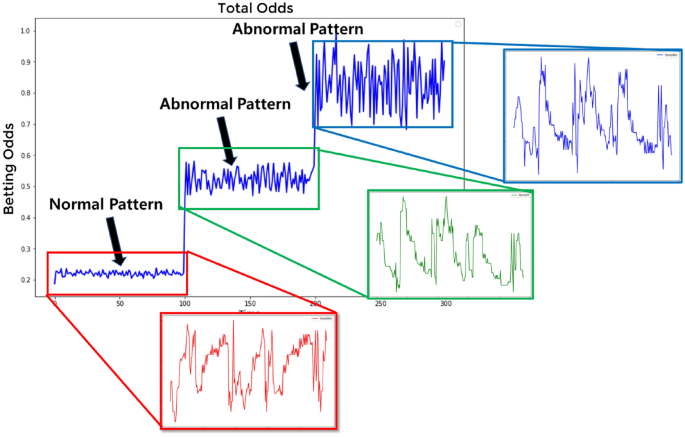

Before learning, we checked whether the betting odds data and the length of each match were irregular. For instance, there may be 50 data points for match A and 80 points for match B. In such a case, the difference in data dimensions hinders the model’s learning process. Therefore, data dimensions must be equalized before learning. Given the average data length of 80 to 100, the length of every dividend series was adjusted to 100 in an analyzable form, before smoothing by adding a sine value. Figure 4 shows the data dimension adjustment to 100 without changing the overall betting odds graph pattern, together with the application of the sine-based smoothing. Superimposing a sine wave onto the adjusted data enabled us to highlight potential periodicities and improve the model’s ability to capture these recurrent patterns. Applied after the dimension adjustment, the sine-based smoothing mitigates unwarranted noise and fluctuations while amplifying latent periodic trends, thereby promoting data uniformity across matches. This, in turn, helps the models discern principal trends over outliers and improves their ability to generalize across diverse and unseen datasets [37].

Figure 5 shows an abnormal match, with the win-tie-loss betting odds data adjusted in dimension as in Fig. 4, in which a given dividend shows no change during learning. When each of the win-tie-loss betting odds is learned, a dividend may show no change even for an abnormal match, while its loss pattern may resemble that of a normal match. Consequently, the learning model may regard the match as normal when the three different patterns are applied simultaneously.

To address this problem, the win, tie, and loss datasets, each of length 100, were combined into a single dataset of length 300. Figure 6 shows the result. The three types of betting odds shown in Fig. 5 were combined to form one pattern, which in turn emphasized the characteristics of data-deprived abnormal matches during learning.
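The length adjustment and pattern combination described in this section can be sketched as follows. The text does not specify how series were resized to 100 points, so linear interpolation is used here purely as an assumption; the sine-based smoothing step is omitted because its exact form is not given. The function names and the short example series are hypothetical.

```python
def resample(series, target_len=100):
    """Resize a series to target_len points by linear interpolation
    (an assumed method; the first and last values are preserved)."""
    n = len(series)
    if n == 0 or target_len < 2:
        raise ValueError("need a non-empty series and target_len >= 2")
    if n == 1:
        return [float(series[0])] * target_len
    out = []
    for i in range(target_len):
        pos = i * (n - 1) / (target_len - 1)  # fractional index into series
        lo = int(pos)
        hi = min(lo + 1, n - 1)
        frac = pos - lo
        out.append(series[lo] * (1 - frac) + series[hi] * frac)
    return out


def combine_pattern(favorite, tie, underdog, target_len=100):
    """Concatenate the three resized odds series into one feature vector."""
    return (resample(favorite, target_len)
            + resample(tie, target_len)
            + resample(underdog, target_len))


# Invented odds series of unequal length for one match.
favorite = [1.9, 1.8, 1.85, 1.7]
tie = [3.2, 3.3, 3.1]
underdog = [4.0, 4.2, 4.1, 4.4, 4.6]
pattern = combine_pattern(favorite, tie, underdog)
print(len(pattern))  # 300
```

Placing the three series side by side lets a single model see a flat Favorite line together with whatever movement occurs in the Tie and Underdog lines, instead of judging each series in isolation.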

Abnormal betting detection model

An abnormal match detection model was developed based on the data analysis results of each model, using the match result dividend data. As shown in Fig. 7, the process begins with the real-time entry of data for each odds rate during a sports game. This input includes three odds categories: Favorite, Tie, and Underdog. The input data are then transformed through the Pattern Combine method proposed in this study, generating a new pattern. This newly derived pattern is fed into the five learning models. Each model individually classifies games as normal or abnormal and provides the corresponding results. Consequently, the betting pattern results are obtained for each model, enabling a comprehensive evaluation of their performance. Based on the betting pattern analysis results from the five models, the abnormal betting detection model classified matches according to the number of models that judged them abnormal, as follows: one or less, normal; two, caution; three, danger; and four or more, abnormal. Figure 7 shows the models’ classification process, which provides a dividend pattern to help detect abnormal matches. In summary, we trained four machine learning models and created an ensemble model using the parameters of these four models, ultimately producing a total of five fraud detection models. We classified matches as normal, caution, danger, or abnormal based on the number of abnormal judgments across all five models, rather than judging abnormal matches based on each model’s individual results.

Data analysis

The present study proceeded with machine learning using five high-performing multiclass models: LR, RF, SVM, KNN, and the ensemble model, which was an optimized version of the previous four models. These were used to classify normal and abnormal matches by learning their patterns from sports betting odds data. This study used the win, tie, and loss odds estimated by the iSports API using the 31 variables presented in Table 1.

Classification using the four models and one ensemble model performs well on data such as odds, which do not have many variables. The accuracy on the training data averaged 95% across the models, and the loss value averaged 0.05, indicating high accuracy on the training data. Therefore, the model was adopted to detect match-fixing.

Because these 31 variables affect the outcome of a soccer game, they were not directly employed as data; rather, their influence was reflected in the derived odds. Therefore, the odds variables for wins, ties, and losses were employed in this study. The dataset was sorted chronologically for wins, ties, and losses, regardless of CompanyID (a variable used to differentiate and categorize the betting companies), and in cases of identical timestamps, averages were used. We collected betting data from three days before the start of the game until the end of the game. Data were recorded whenever there was a change in the Favorite, Tie, or Underdog betting data, without a fixed time interval.

Table 2 provides a detailed description of the variables in the data subset. ScheduleID identifies specific matches and allows us to distinguish details such as the match date and the teams involved in the game. CompanyID distinguishes among the 12 betting companies. Favorite, Tie, and Underdog represent the betting data for wins, ties, and losses from the perspective of the home team. These variables constitute the primary data used in this study, reflecting real-time changes in betting data. ModifyTime records the time when a data change occurred. For example, if the betting data from Company A changed, the modified Favorite, Tie, and Underdog data would be recorded together with the time of the modification. If Company A’s betting data changed while Company B’s did not, only the modified betting data from Company A would be recorded.
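The merging step described above (sort chronologically, ignore CompanyID, average records that share a timestamp) can be sketched as below. The field names follow the variables named in this section; the record layout as Python dictionaries, the function name, and the sample values are assumptions, and ModifyTime is assumed to be any sortable value.

```python
from collections import defaultdict


def merge_odds(records):
    """Merge per-company odds records for one match into a single series.

    Records are grouped by ModifyTime regardless of CompanyID, identical
    timestamps are averaged, and the result is sorted chronologically.
    """
    buckets = defaultdict(list)
    for r in records:
        buckets[r["ModifyTime"]].append((r["Favorite"], r["Tie"], r["Underdog"]))
    merged = []
    for t in sorted(buckets):
        rows = buckets[t]
        n = len(rows)
        merged.append({
            "ModifyTime": t,
            "Favorite": sum(row[0] for row in rows) / n,
            "Tie": sum(row[1] for row in rows) / n,
            "Underdog": sum(row[2] for row in rows) / n,
        })
    return merged


# Invented records: two companies report at time 1, one at time 0.
records = [
    {"CompanyID": "A", "ModifyTime": 1, "Favorite": 2.0, "Tie": 3.0, "Underdog": 4.0},
    {"CompanyID": "B", "ModifyTime": 1, "Favorite": 2.2, "Tie": 3.2, "Underdog": 3.8},
    {"CompanyID": "A", "ModifyTime": 0, "Favorite": 1.9, "Tie": 3.1, "Underdog": 4.1},
]
for row in merge_odds(records):
    print(row)
```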

This study tested five models using a dataset of 2607 items. This dataset was used for learning and comprises 2586 normal matches and 21 abnormal matches. For the validation phase, a separate set of 20 matches was employed, evenly divided into 10 normal and 10 abnormal matches. This setup ensures that the models are trained on a comprehensive dataset and then validated on a balanced mix of normal and abnormal match data. We acknowledge that the dataset in our study may be perceived as limited in quantity; however, because we deal with betting data on unusual matches, in practice we cannot use data for training without verified instances of matches with illegal odds. If the model were trained with odds labeled as abnormal that actually came from a normal match, it would learn from mislabeled data. Therefore, only data verified against actual cases were used. Although the learning dataset is small, it contains all the patterns of illegal or abnormal games that occur within it; therefore, it represents the phenomenon studied in this research. The RF, KNN, and ensemble models recorded a high accuracy of over 92%, whereas the LR and SVM models were approximately 80% accurate (Table 3).
