For the last two years, Facebook AI Research (FAIR) has worked with 13 universities around the world to assemble the largest ever dataset of first-person video, built specifically to train deep-learning image-recognition models. AIs trained on the dataset, called Ego4D, will be better at controlling robots that interact with people, or interpreting images from smart glasses. “Machines will be able to help us in our daily lives only if they really understand the world through our eyes,” says Kristen Grauman at FAIR, who leads the project.

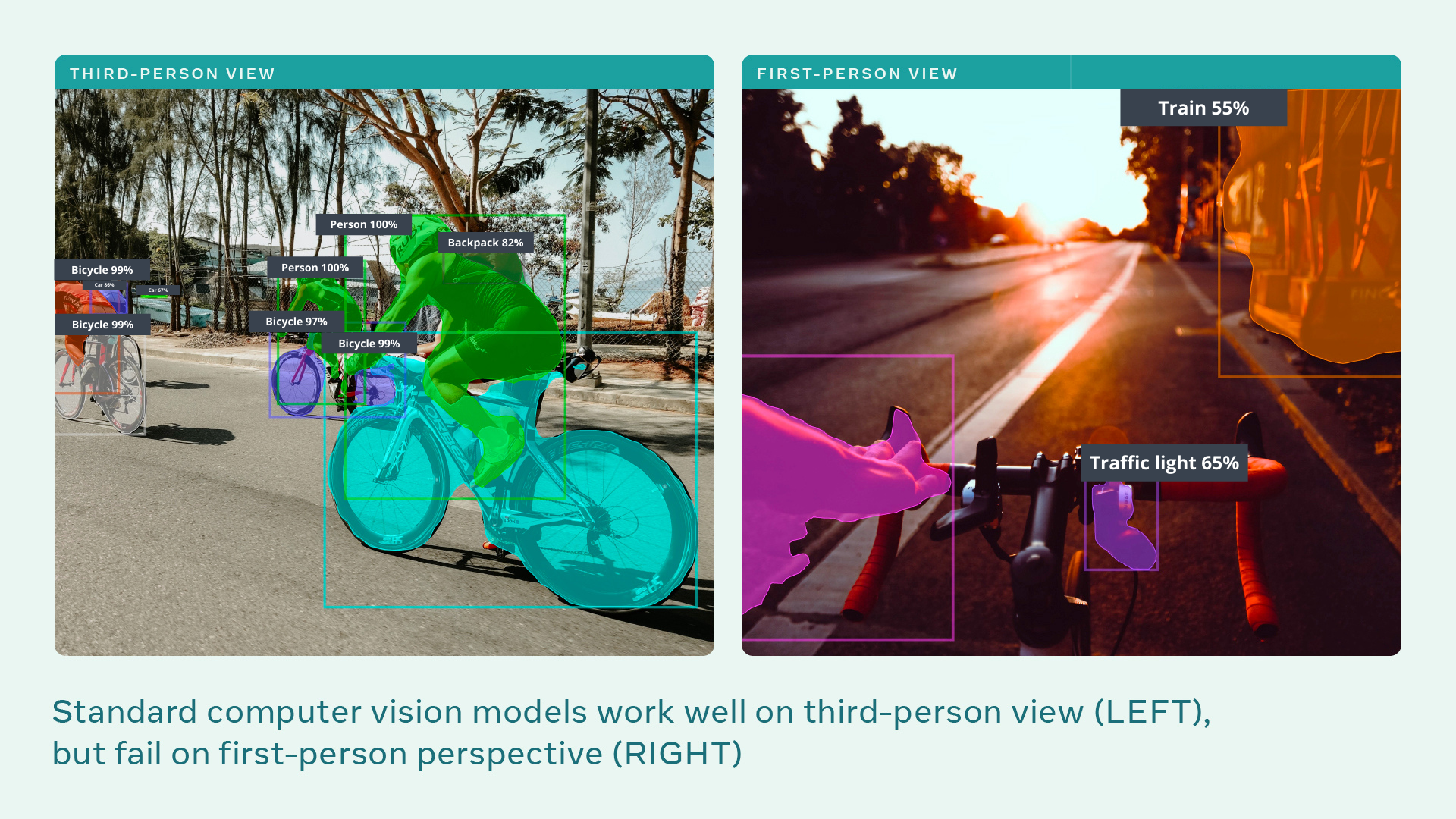

Such tech could support people who need assistance around the home, or guide people in tasks they are learning to complete. “The video in this dataset is much closer to how humans observe the world,” says Michael Ryoo, a computer vision researcher at Google Brain and Stony Brook University in New York, who is not involved in Ego4D.

But the potential misuses are clear and worrying. The research is funded by Facebook, a social media giant that has recently been accused in the Senate of putting profits over people’s wellbeing, a sentiment corroborated by MIT Technology Review’s own investigations.

The business model of Facebook, and other Big Tech companies, is to wring as much data as possible from people’s online behavior and sell it to advertisers. The AI outlined in the project could extend that reach to people’s everyday offline behavior, revealing the objects around a person’s home, what activities she enjoys, who she spends time with, and even where her gaze lingers: an unprecedented degree of personal information.

“There’s work on privacy that needs to be done as you take this out of the world of exploratory research and into something that’s a product,” says Grauman. “That work could even be inspired by this project.”

Ego4D is a step-change. The biggest previous dataset of first-person video consists of 100 hours of footage of people in the kitchen. The Ego4D dataset consists of 3,025 hours of video recorded by 855 people in 73 different locations across nine countries (US, UK, India, Japan, Italy, Singapore, Saudi Arabia, Colombia, and Rwanda).

The participants had different ages and backgrounds; some were recruited for their visually interesting occupations, such as bakers, mechanics, carpenters, and landscapers.

Previous datasets typically consist of semi-scripted video clips only a few seconds long. For Ego4D, participants wore head-mounted cameras for up to 10 hours at a time and captured first-person video of unscripted daily activities, including walking along a street, reading, doing laundry, shopping, playing with pets, playing board games, and interacting with other people. Some of the footage also includes audio, data about where the participants’ gaze was focused, and multiple perspectives on the same scene. It’s the first dataset of its kind, says Ryoo.
