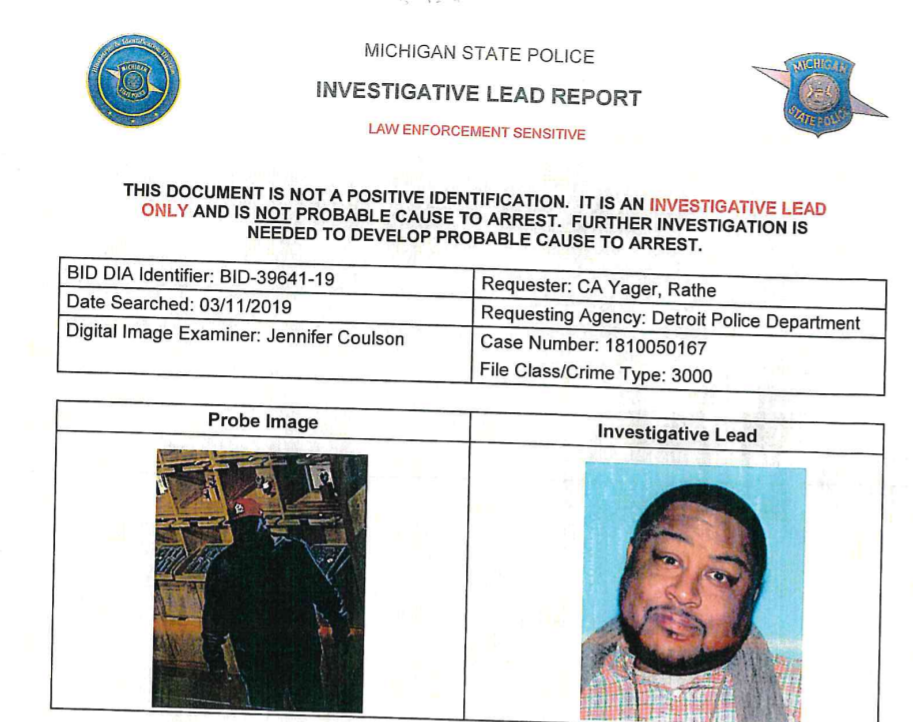

A photo of the alleged suspect in a theft case in Detroit, left, next to the driver’s license photo of Robert Williams. An algorithm said Williams was the suspect, but he and his lawyers say the tool produced a false hit.

ACLU of Michigan

Police in Detroit were trying to figure out who stole five watches from a Shinola retail store. Authorities say the thief took off with an estimated $3,800 worth of merchandise.

Investigators pulled a security video that had recorded the incident. Detectives zoomed in on the grainy footage and ran the person who appeared to be the suspect through facial recognition software.

A hit came back: Robert Julian-Borchak Williams, 42, of Farmington Hills, Mich., about 25 miles northwest of Detroit.

In January, police pulled up to Williams’ home and arrested him while he stood on his front lawn in front of his wife and two daughters, ages 2 and 5, who cried as they watched their father being placed in the patrol car.

His wife, Melissa Williams, asked where her husband was being taken.

“ ‘Google it,’ ” she remembers an officer telling her.

Robert Williams was placed in an interrogation room, and police put three photos in front of him: two photos taken from the surveillance camera in the store and a photo of Williams’ state-issued driver’s license.

“When I look at the picture of the guy, I just see a big Black guy. I don’t see a resemblance. I don’t think he looks like me at all,” Williams said in an interview with NPR.

“[The detective] flips the third page over and says, ‘So I guess the computer got it wrong, too.’ And I said, ‘Well, that’s me,’ pointing at a picture of my previous driver’s license,” Williams said of the interrogation with detectives. “ ‘But that guy’s not me,’ ” he said, referring to the other photographs.

“I picked it up and held it to my face and told him, ‘I hope you don’t think all Black people look alike,’ ” Williams said.

Williams was detained for 30 hours and then released on bail until a court hearing on the case, his lawyers say.

At the hearing, a Wayne County prosecutor announced that the charges against Williams were being dropped.

Civil rights experts say Williams is the first known example of an American wrongfully arrested based on a false hit produced by facial recognition technology.

Lawyer: artificial intelligence ‘framed and informed everything’

What makes Williams’ case extraordinary is that police admitted that a facial recognition search, conducted by Michigan State Police in a crime lab at the request of the Detroit Police Department, prompted the arrest, according to charging documents reviewed by NPR.

The pursuit of Williams as a possible suspect came despite repeated claims by him and his lawyers that the match produced by artificial intelligence was faulty.

The alleged suspect in the security camera image was wearing a red St. Louis Cardinals hat. Williams, a Detroit native, said he would under no circumstances be wearing that hat.

“They never even asked him any questions before arresting him. They never asked him if he had an alibi. They never asked if he had a red Cardinals hat. They never asked him where he was that day,” said lawyer Phil Mayor with the ACLU of Michigan.

On Wednesday, the ACLU of Michigan filed a complaint against the Detroit Police Department asking that police stop using the software in investigations.

In a statement to NPR, the Detroit Police Department said that after the Williams case, the department enacted new rules. Now, only still photos, not security footage, can be used for facial recognition. And the technology is now used only in cases of violent crime.

“Facial recognition software is an investigative tool that is used to generate leads only. Additional investigative work, corroborating evidence and probable cause are required before an arrest can be made,” Detroit Police Department Sgt. Nicole Kirkwood said in a statement.

In Williams’ case, police had asked the store security guard, who had not witnessed the robbery, to pick the suspect out of a photo lineup based on the footage, and the security guard selected Williams.

Victoria Burton-Harris, Williams’ lawyer, said in an interview that she is skeptical that investigators used the facial recognition software as only one of several possible leads.

“When that technology picked my client’s face out, from there, it framed and informed everything that officers did subsequently,” Burton-Harris said.

Academic and government studies have demonstrated that facial recognition systems misidentify people of color more often than white people.

Williams: “Let’s say that this case wasn’t retail fraud. What if it’s rape or murder?”

According to Georgetown Law’s Center on Privacy and Technology, at least a quarter of the nation’s law enforcement agencies have access to face recognition tools.

“Most of the time, people who are arrested using face recognition are not told face recognition was used to arrest them,” said Jameson Spivack, a researcher at the center.

While Amazon, Microsoft and IBM have announced a halt to sales of face recognition technology to law enforcement, Spivack said that will have little effect, since most major facial recognition software contracts with police are with smaller, more specialized companies, like South Carolina-based DataWorks Plus, the company that supplied the Detroit Police Department with its face-scanning software.

The company did not respond to an interview request.

DataWorks Plus has supplied the technology to government agencies in Santa Barbara, Calif., Chicago and Philadelphia.

Facial recognition technology is used by consumers every day to unlock their smartphones or to tag friends on social media. Some airports use the technology to scan passengers before they board flights.

Its deployment by governments, though, has drawn concern from privacy advocates and experts who study the machine learning tool and have highlighted its flaws.

“Some departments of motor vehicles will use facial recognition to detect license fraud, identity theft, but the most common use is law enforcement, whether it’s state, local or federal law enforcement,” Spivack said.

The government use of facial recognition technology has been banned in half a dozen cities.

In Michigan, Williams said he hopes his case is a wake-up call to lawmakers.

“Let’s say that this case wasn’t retail fraud. What if it’s rape or murder? Would I have gotten out of jail on a personal bond, or would I have ever come home?” Williams said.

Williams and his wife, Melissa, worry about the long-term effects the arrest will have on their two young daughters.

“Seeing their dad get arrested, that was their first interaction with the police. So it’s definitely going to shape how they perceive law enforcement,” Melissa Williams said.

In the complaint, Williams and his lawyers say that if the police department won’t ban the technology outright, then at least his photo should be removed from the database so this doesn’t happen again.

“If someone wants to pull my name and look me up,” Williams said, “who wants to be seen as a thief?”
